Author: Jordi Pages < jordi.pages@pal-robotics.com >

Maintainer: Jordi Pages < jordi.pages@pal-robotics.com >

Support: tiago-support@pal-robotics.com

Source: https://github.com/pal-robotics/tiago_tutorials.git


Face detection (C++)

Description: Example of a ROS node embedding OpenCV's face detector.

Keywords: OpenCV

Tutorial Level: ADVANCED

Next Tutorial: Planar object detection and pose estimation

Purpose

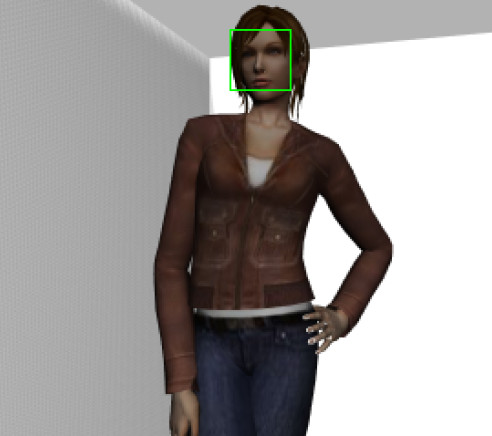

This tutorial presents a ROS node that subscribes to an image topic and applies the OpenCV face detector, which is based on an AdaBoost cascade of Haar features. The node publishes the ROIs (regions of interest) of the detections. It also publishes a debug image: the processed image with the ROIs likely to contain faces drawn on it.
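The node delegates detection to OpenCV, so its internals are not part of this tutorial. As a rough illustration of the idea behind a Haar cascade, the following self-contained C++ sketch does two things. First, it evaluates a two-rectangle Haar-like feature with an integral image, which needs only four lookups per rectangle regardless of its size. Second, it runs a toy cascade in which each stage sums AdaBoost-weighted weak votes and rejects the window as soon as a stage score falls below its threshold. All names (`IntegralImage`, `twoRectFeature`, `cascadeAccepts`, ...) are hypothetical. This is not the code of pal_face_detector_opencv or of OpenCV, whose detector loads trained cascades from XML files and scans the image at multiple scales.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical illustration, not OpenCV's implementation.
// Integral image with an extra leading row/column of zeros:
// at(x, y) = sum of img pixels in rows < y and columns < x.
struct IntegralImage {
  std::size_t w = 0, h = 0;
  std::vector<long long> data;  // (h + 1) * (w + 1) entries

  IntegralImage(const std::vector<int>& img, std::size_t width, std::size_t height)
      : w(width), h(height), data((width + 1) * (height + 1), 0) {
    for (std::size_t y = 0; y < h; ++y) {
      long long rowSum = 0;  // running sum of row y up to column x
      for (std::size_t x = 0; x < w; ++x) {
        rowSum += img[y * w + x];
        data[(y + 1) * (w + 1) + (x + 1)] = data[y * (w + 1) + (x + 1)] + rowSum;
      }
    }
  }

  long long at(std::size_t x, std::size_t y) const { return data[y * (w + 1) + x]; }

  // Sum of the rectangle [x, x + rw) x [y, y + rh) using four lookups.
  long long rectSum(std::size_t x, std::size_t y, std::size_t rw, std::size_t rh) const {
    return at(x + rw, y + rh) - at(x, y + rh) - at(x + rw, y) + at(x, y);
  }
};

// Two-rectangle Haar-like feature: left half minus right half.
long long twoRectFeature(const IntegralImage& ii, std::size_t x, std::size_t y,
                         std::size_t fw, std::size_t fh) {
  const std::size_t half = fw / 2;
  return ii.rectSum(x, y, half, fh) - ii.rectSum(x + half, y, half, fh);
}

// A weak classifier thresholds one feature and casts a weighted vote.
struct WeakClassifier {
  std::size_t x, y, fw, fh;   // feature geometry relative to the window
  long long threshold;        // feature value threshold
  double passVote, failVote;  // AdaBoost-style weighted votes
};

struct Stage {
  std::vector<WeakClassifier> weak;
  double stageThreshold;
};

// True if the window at (wx, wy) passes every stage; a window is rejected
// as soon as one stage fails, which is what makes cascades fast.
bool cascadeAccepts(const IntegralImage& ii, const std::vector<Stage>& cascade,
                    std::size_t wx, std::size_t wy) {
  for (const Stage& stage : cascade) {
    double score = 0.0;
    for (const WeakClassifier& wc : stage.weak) {
      const long long f = twoRectFeature(ii, wx + wc.x, wy + wc.y, wc.fw, wc.fh);
      score += (f >= wc.threshold) ? wc.passVote : wc.failVote;
    }
    if (score < stage.stageThreshold) return false;  // early rejection
  }
  return true;
}
```

For example, on a 4x2 image whose left half has value 10 and right half value 0, the feature over the whole image is 40. A one-stage cascade with a single weak classifier thresholded at 20 therefore accepts that window and rejects the mirrored image.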

Pre-Requisites

First, make sure that the tutorials are properly installed along with the TIAGo simulation, as shown in the Tutorials Installation Section.

Download the face detector

To run the demo, first download the source code of the face detector:

```
cd ~/tiago_public_ws/src
git clone https://github.com/pal-robotics/pal_face_detector_opencv.git
```

Building the workspace

Now build the workspace:

```
cd ~/tiago_public_ws
catkin build
```

Execution

Open four consoles and source the workspace in each one:

```
cd ~/tiago_public_ws
source ./devel/setup.bash
```

In the first console launch the simulation

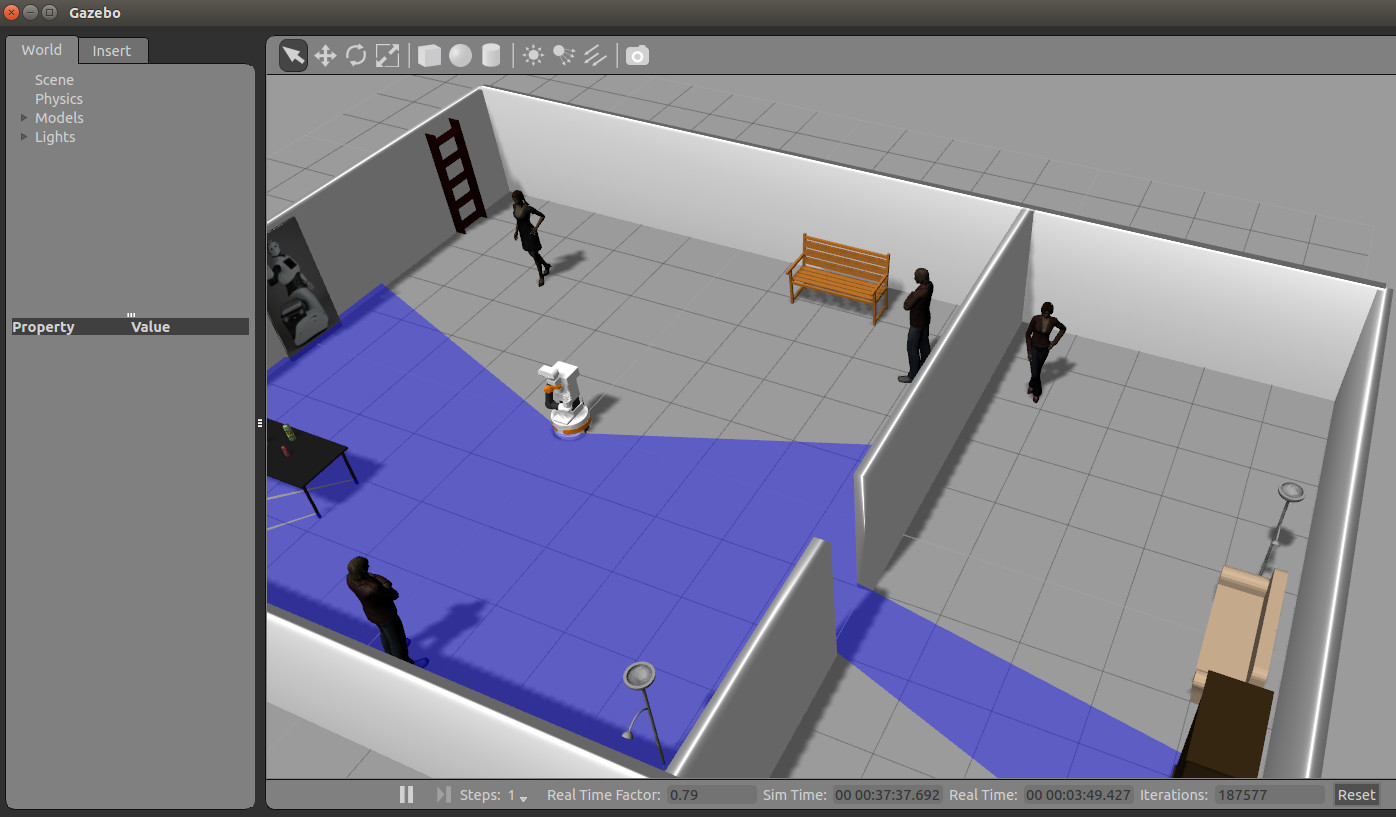

roslaunch tiago_gazebo tiago_gazebo.launch public_sim:=true end_effector:=pal-gripper world:=simple_office_with_people

Note that in this simulation world there are several person models

In the second console, run the face detector node. The `image` argument selects the camera topic the detector subscribes to:

```
roslaunch pal_face_detector_opencv detector.launch image:=/xtion/rgb/image_raw
```

Moving around

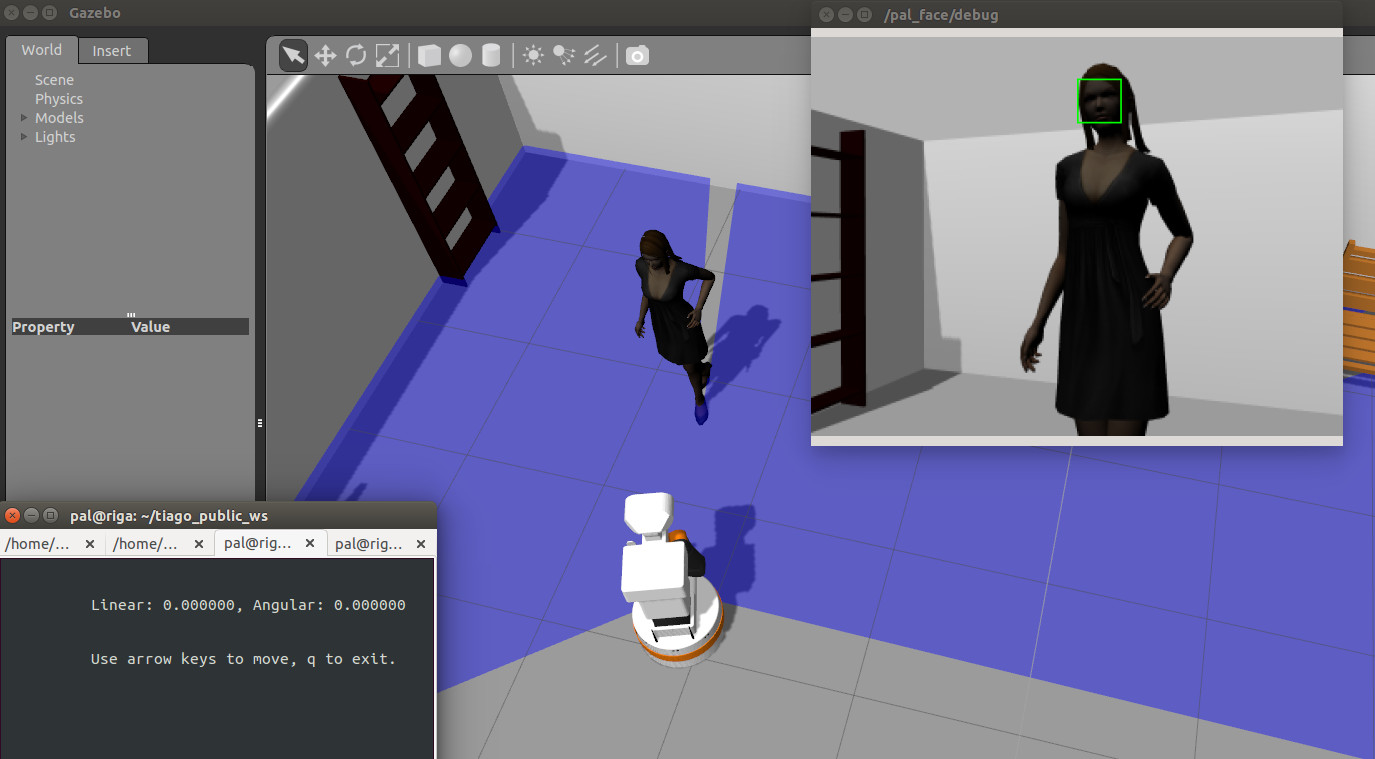

To detect faces, the robot must get close to the people. In the third console, run the key_teleop node:

```
rosrun key_teleop key_teleop.py
```

Then use the arrow keys of the keyboard to drive TIAGo around the simulated world and approach the different people.

Watching the detections

In the fourth console, run an image viewer to see the face detections:

```
sudo apt update
sudo apt install ros-melodic-image-view
rosrun image_view image_view image:=/pal_face/debug
```

Alternatively, use rqt_image_view:

```
rosrun rqt_image_view rqt_image_view
```

and select /pal_face/debug in the drop-down menu.

Detected faces are outlined with their ROI in the debug image. The detections are published not only in the /pal_face/debug Image topic but also in /pal_face/faces, which contains a vector with the image ROIs of all the detected faces. To inspect the contents of this topic, run:

```
rostopic echo -n 1 /pal_face/faces
```

This prints a single message from the topic. When a face is detected, the message looks like this:

```
header:
  seq: 0
  stamp:
    secs: 2582
    nsecs: 37000000
  frame_id: xtion_rgb_optical_frame
faces:
  -
    x: 320
    y: 50
    width: 56
    height: 56
    eyesLocated: False
    leftEyeX: 0
    leftEyeY: 0
    rightEyeX: 0
    rightEyeY: 0
    position:
      x: 0.0
      y: 0.0
      z: 0.0
    name: ''
    confidence: 0.0
    expression: ''
    expression_confidence: 0.0
    emotion_anger_confidence: 0.0
    emotion_disgust_confidence: 0.0
    emotion_fear_confidence: 0.0
    emotion_happiness_confidence: 0.0
    emotion_neutral_confidence: 0.0
    emotion_sadness_confidence: 0.0
    emotion_surprise_confidence: 0.0
    camera_pose:
      header:
        seq: 0
        stamp:
          secs: 0
          nsecs: 0
        frame_id: ''
      child_frame_id: ''
      transform:
        translation:
          x: 0.0
          y: 0.0
          z: 0.0
        rotation:
          x: 0.0
          y: 0.0
          z: 0.0
          w: 0.0
---
```
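Of these fields, only `x`, `y`, `width` and `height` are filled in by this detector; they locate the face ROI in pixels of the input image. The minimal C++ sketch below shows how a consumer of /pal_face/faces might use them. It assumes, as with OpenCV's `cv::Rect`, that `x` and `y` give the top-left corner of the ROI. The `FaceRoi` and `Point2` structs and both helper functions are hypothetical stand-ins, not the generated ROS message classes. The code computes the ROI center and clips the ROI to the image bounds before the face would be cropped. The 640x480 image size used in the example is also an assumption.

```cpp
#include <algorithm>

// Hypothetical stand-in mirroring the x, y, width and height fields of a
// face in /pal_face/faces (assumed: x, y is the top-left corner, in pixels).
struct FaceRoi {
  int x, y, width, height;
};

struct Point2 {
  double u, v;  // pixel coordinates
};

// Center of the ROI in pixel coordinates.
Point2 roiCenter(const FaceRoi& r) {
  return {r.x + r.width / 2.0, r.y + r.height / 2.0};
}

// Clip an ROI to an image of size imgWidth x imgHeight, e.g. before cropping
// the face out of the camera image. Returns a zero-size ROI if they do not overlap.
FaceRoi clampToImage(const FaceRoi& r, int imgWidth, int imgHeight) {
  const int x0 = std::max(r.x, 0);
  const int y0 = std::max(r.y, 0);
  const int x1 = std::min(r.x + r.width, imgWidth);
  const int y1 = std::min(r.y + r.height, imgHeight);
  if (x1 <= x0 || y1 <= y0) return {x0, y0, 0, 0};
  return {x0, y0, x1 - x0, y1 - y0};
}
```

For the message above, `roiCenter(FaceRoi{320, 50, 56, 56})` gives the pixel (348, 78). That ROI already lies inside an assumed 640x480 image, so `clampToImage` returns it unchanged.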