Planet ROS

Planet ROS - http://planet.ros.org


ROS Discourse General: Controlling the Nero Robotic Arm with OpenClaw


As a popular open-source project, OpenClaw has become a highlight in robotic arm control thanks to its intuitive operation and strong adaptability. It links AI commands to device execution end to end, greatly lowering the barrier to controlling a robotic arm. This article focuses on practical implementation: using the pyAgxArm SDK, we will guide you through downloading, installing, and configuring OpenClaw to control the NERO 7-axis robotic arm efficiently.

Seamless AI Control: AgileX NERO 7-DoF Arm with OpenClaw

Download and Install OpenClaw

- Open the OpenClaw official website: https://openclaw.ai/

- Locate the Quick Start tab and run the one-click installation script

Start Configuring OpenClaw

- It is recommended to select the Qwen model

- Select all hooks

- Open the web interface

Teach OpenClaw the Skill and Rules for Controlling the Robotic Arm

- Create an `agx_arm_codegen` directory in the skill folder, then create the following skill files:

- SKILLS.md

---

name: agx-arm-codegen

description: Guide OpenClaw to generate pyAgxArm-based robotic arm control code from user natural language. When users describe robotic arm movements with prompts and existing scripts cannot directly meet the requirements, automatically organize and generate executable Python scripts based on the APIs and examples provided by this skill.

metadata:

{

"openclaw":

{

"emoji": "烙",

"requires": { "bins": ["python3", "pip3"] },

},

}

---

## Function Overview

- This skill is used to **guide OpenClaw to generate** executable pyAgxArm control code (Python scripts) based on user natural language descriptions, rather than just calling existing CLIs.

- Reference SDK: pyAgxArm ([GitHub](https://github.com/agilexrobotics/pyAgxArm)); Reference example: `pyAgxArm/demos/nero/test1.py`.

## When to Use This Skill

- Users say "Write code to control the robotic arm", "Generate a control script based on my description", "Make the robotic arm perform multiple actions in sequence", etc.

- Users explicitly request to "generate Python code" or "provide a runnable script" to control AgileX robotic arms such as Nero/Piper.

## Generate Code Using This Skill

- Based on user prompts, combine the APIs and templates in `references/pyagxarm-api.md` of this skill to generate a complete, runnable Python script.

- After generation, explain that the script must run in an environment with pyAgxArm and python-can installed, with CAN activated and the robotic arm powered on; remind users to pay attention to safety (no one in the workspace; small-scale testing first is recommended).

## Rules for Generating Code

1. **Connection and Configuration**

- Use `create_agx_arm_config(robot="nero", comm="can", channel="can0", interface="socketcan")` to create a configuration (Nero example; Piper can use `robot="piper"`).

- Use `AgxArmFactory.create_arm(robot_cfg)` to create a robotic arm instance, then `robot.connect()` to establish a connection.

2. **Enabling and Pre-Motion**

- CRITICAL: The robot MUST BE ENABLED before switching modes. If the robot is in a disabled state, you cannot switch modes.

- Switch to normal mode before movement, then enable: `robot.set_normal_mode()`, then poll `robot.enable()` until successful; you can set `robot.set_speed_percent(100)`.

- Motion modes: Whenever using move_* or needing to switch to * mode, explicitly set `robot.set_motion_mode(robot.MOTION_MODE.J)` (Joint), `P` (Point-to-Point), `L` (Linear), `C` (Circular), `JS` (Joint Quick Response, use with caution).

3. **Motion Interfaces and Units**

- Joint motion: `robot.move_j([j1, j2, ..., j7])`, unit is **radians**, Nero has 7 joints.

- Cartesian: `robot.move_p(pose)` / `robot.move_l(pose)`, pose is `[x, y, z, roll, pitch, yaw]`, position unit is **meters**, attitude is **radians**.

- Circular: `robot.move_c(start_pose, mid_pose, end_pose)`, each pose is 6 floating-point numbers.

- CRITICAL: All movement commands (move_j, move_js, move_mit, move_c, move_l, move_p) must be used in normal mode.

- After each motion command, poll until `robot.get_arm_status().msg.motion_status == 0` (or wrap this in a `wait_motion_done(robot, timeout=...)` helper) before executing the next step.

4. **Mode Switching**

- Switching modes (master, slave, normal) requires a 1 s delay before and after the mode switch.

- Use `robot.set_normal_mode()` to set normal mode

- Use `robot.set_master_mode()` to set master mode

- Use `robot.set_slave_mode()` to set slave mode

- CRITICAL: Enable the robot FIRST with `robot.enable()` BEFORE switching modes

5. **Safety and Conclusion**

- In the generated script, note: confirm workspace safety before execution; small-scale movement is recommended for the first time; use physical emergency stop or `robot.electronic_emergency_stop()` / `robot.disable()` in case of emergency.

- If the user requests "disable after completion", call `robot.disable()` at the end of the script.

6. **Implementation Details**

- When waiting for motion to complete, use a short timeout (2-3 seconds).

- After each robotic arm operation, add a small sleep (0.01 seconds).

- Motion completion detection: `robot.get_arm_status().msg.motion_status == 0` (not == 1)
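Rule 4's delay requirement can be wrapped in a small helper so generated scripts never forget one of the two pauses. This is a minimal sketch, not part of pyAgxArm: `switch_mode_with_delay` is a hypothetical name, and the only assumption is that the mode setters listed above (`set_normal_mode`, `set_master_mode`, `set_slave_mode`) are zero-argument methods.

```python
import time
from typing import Callable


def switch_mode_with_delay(set_mode: Callable[[], object], delay_s: float = 1.0) -> None:
    """Hypothetical helper: call a mode setter with a pause before and after it.

    `set_mode` is any zero-argument callable, e.g. robot.set_normal_mode.
    """
    time.sleep(delay_s)  # delay before the mode switch
    set_mode()
    time.sleep(delay_s)  # delay after the mode switch


# Usage with a real arm (not executed here):
# switch_mode_with_delay(robot.set_normal_mode)
```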

## Reference Files

- **API and Minimal Runnable Template**: `references/pyagxarm-api.md`

When generating code, refer to the interfaces and code snippets in this file to ensure consistency with pyAgxArm and test1.py usage.

## Safety Notes

- The generated code will drive a physical robotic arm. Users must be reminded: confirm no personnel or obstacles in the workspace before execution; it is recommended to test with small movements and low speeds first.

- High-risk modes (such as `move_js`, `move_mit`) should be marked with risks in code comments or user explanations, and it is recommended to use them only after understanding the consequences.

- This skill is only responsible for "guiding code generation" and does not directly execute movements; users need to prepare the actual running environment, CAN activation, and pyAgxArm installation by themselves (refer to environment preparation in the agx-arm skill).

- pyagxarm-api.md

# pyAgxArm API Quick Reference & Minimal Runnable Template

For reference when OpenClaw generates robotic arm control code from user natural language. SDK source: pyAgxArm ([GitHub](https://github.com/agilexrobotics/pyAgxArm)); Example reference: `pyAgxArm/demos/nero/test1.py`.

## 1. Connection and Configuration

```python
from pyAgxArm import create_agx_arm_config, AgxArmFactory

# Configuration: robot options - nero / piper / piper_h / piper_l / piper_x; channel e.g. can0
robot_cfg = create_agx_arm_config(
    robot="nero",
    comm="can",
    channel="can0",
    interface="socketcan",
)

robot = AgxArmFactory.create_arm(robot_cfg)
robot.connect()
```

- `create_agx_arm_config(robot, comm="can", channel="can0", interface="socketcan", **kwargs)`: Create a configuration dictionary; CAN-related parameters are passed via kwargs (e.g. `channel`, `interface`).
- `AgxArmFactory.create_arm(config)`: Return a robotic arm driver instance.
- `robot.connect()`: Establish the CAN connection and start the reading thread.

## 2. Enabling and Modes

```python
import time

robot.set_normal_mode()  # Normal mode (single arm control)

# Enable: poll until successful
while not robot.enable():
    time.sleep(0.01)

robot.set_speed_percent(100)  # Motion speed percentage 0–100

# Disable
while not robot.disable():
    time.sleep(0.01)
```

- Master/Slave modes (Nero/Piper, etc.): `robot.set_master_mode()` (zero-force teaching), `robot.set_slave_mode()` (follow master arm).
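The polling loops above retry forever if the arm never reports success (for example, when CAN is down or the arm is unpowered). A bounded variant is sketched below. `poll_until` is a hypothetical helper, and the only SDK assumption is the one the loops above already make: `robot.enable()` / `robot.disable()` return a truthy value on success.

```python
import time
from typing import Callable


def poll_until(action: Callable[[], bool], timeout_s: float = 5.0,
               interval_s: float = 0.01) -> bool:
    """Hypothetical helper: call `action` until it returns truthy or time runs out.

    Returns True on success, False if `timeout_s` elapsed first.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if action():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


# Usage with a real arm (not executed here):
# if not poll_until(robot.enable, timeout_s=5.0):
#     raise RuntimeError("arm did not enable; check CAN and power")
```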

## 3. Motion Modes and Interfaces

| Mode | Constant | Interface | Description |
|---|---|---|---|
| Joint Position Speed | `robot.MOTION_MODE.J` | `robot.move_j([j1..j7])` | 7 joint angles (radians), with smoothing |
| Joint Quick Response | `robot.MOTION_MODE.JS` | `robot.move_js([j1..j7])` | No smoothing, use with caution |
| Point-to-Point | `robot.MOTION_MODE.P` | `robot.move_p([x,y,z,roll,pitch,yaw])` | Cartesian pose, meters/radians |
| Linear | `robot.MOTION_MODE.L` | `robot.move_l([x,y,z,roll,pitch,yaw])` | Linear trajectory |
| Circular | `robot.MOTION_MODE.C` | `robot.move_c(start_pose, mid_pose, end_pose)` | Each pose is 6 floating-point numbers |

- Units: Joint angles are in radians; Cartesian pose is in meters (x, y, z) and radians (roll, pitch, yaw).

- Nero has 7 joints and Piper has 6 joints; the number of parameters for `move_j`/`move_js` must match the model.
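Because the SDK expects radians and meters, prompts phrased in degrees or millimeters need converting before they reach `move_j` or `move_p`. The sketch below uses hypothetical helper names; the joint counts (Nero 7, Piper 6) come from the notes above, and other models are deliberately left out until checked against the SDK.

```python
import math
from typing import List, Sequence

# Joint counts stated in this reference; extend only after checking the SDK.
JOINT_COUNT = {"nero": 7, "piper": 6}


def joints_deg_to_rad(model: str, joints_deg: Sequence[float]) -> List[float]:
    """Hypothetical helper: check the joint count for `model`, convert degrees to radians."""
    expected = JOINT_COUNT[model]
    if len(joints_deg) != expected:
        raise ValueError(f"{model} expects {expected} joints, got {len(joints_deg)}")
    return [math.radians(a) for a in joints_deg]


def pose_mm_deg_to_m_rad(x_mm: float, y_mm: float, z_mm: float,
                         roll_deg: float, pitch_deg: float, yaw_deg: float) -> List[float]:
    """Hypothetical helper: build [x, y, z, roll, pitch, yaw] in meters/radians."""
    return [x_mm / 1000.0, y_mm / 1000.0, z_mm / 1000.0,
            math.radians(roll_deg), math.radians(pitch_deg), math.radians(yaw_deg)]


# Usage (not executed here):
# robot.move_j(joints_deg_to_rad("nero", [10, 0, 0, 0, 0, 0, 0]))
# robot.move_p(pose_mm_deg_to_m_rad(300, 0, 200, 180, 0, 0))
```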

Example (Joint Motion + Wait for Completion):

```python
import time


def wait_motion_done(robot, timeout: float = 3.0, poll_interval: float = 0.1) -> bool:  # Shorter timeout (2-3s)
    time.sleep(0.5)  # Short pause before the first status poll
    start_t = time.monotonic()
    while True:
        status = robot.get_arm_status()
        # motion_status == 0 means the motion has completed
        if status is not None and getattr(status.msg, "motion_status", None) == 0:
            return True
        if time.monotonic() - start_t > timeout:
            return False
        time.sleep(poll_interval)


robot.set_motion_mode(robot.MOTION_MODE.J)
robot.move_j([0.01, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
wait_motion_done(robot, timeout=3.0)  # Shorter timeout
```

## 4. Read Status

- `robot.get_joint_angles()`: Current joint angles (the array is available via the return value's `.msg` attribute).
- `robot.get_flange_pose()`: Current flange pose `[x, y, z, roll, pitch, yaw]`.
- `robot.get_arm_status()`: Motion status, etc.; `status.msg.motion_status == 0` indicates motion completion.
- Note: Detect motion completion with `robot.get_arm_status().msg.motion_status == 0` (not `== 1`).

## 5. Others

- Homing: `robot.move_j([0] * 7)` (7 joints for Nero).
- Emergency Stop: `robot.electronic_emergency_stop()`; recovery requires `robot.reset()`.
- MIT Impedance/Torque Control (Advanced): `robot.set_motion_mode(robot.MOTION_MODE.MIT)`, `robot.move_mit(joint_index, p_des, v_des, kp, kd, t_ff)`; refer to the SDK for parameter ranges and use with caution.
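One way to keep an exception (or Ctrl+C) in the middle of a sequence from leaving the arm moving is to wrap the motion steps and trigger the electronic emergency stop on failure. This is a sketch of one possible policy, not an SDK feature: `run_guarded` is hypothetical and relies only on `robot.electronic_emergency_stop()` from the list above. After such a stop, recovery requires `robot.reset()`, as noted.

```python
from typing import Callable


def run_guarded(robot, steps: Callable[[], None]) -> None:
    """Hypothetical helper: run `steps`; on any exception (including Ctrl+C),
    trigger the electronic emergency stop, then re-raise so the error stays visible."""
    try:
        steps()
    except BaseException:
        robot.electronic_emergency_stop()
        raise


# Usage with a real arm (not executed here):
# run_guarded(robot, lambda: (robot.move_j([0] * 7), wait_motion_done(robot)))
```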

## 6. Minimal Runnable Template (Extend based on this when generating code)

```python
#!/usr/bin/env python3
import time

from pyAgxArm import create_agx_arm_config, AgxArmFactory


def wait_motion_done(robot, timeout: float = 3.0, poll_interval: float = 0.1) -> bool:  # Shorter timeout (2-3s)
    time.sleep(0.5)  # Short pause before the first status poll
    start_t = time.monotonic()
    while True:
        status = robot.get_arm_status()
        # motion_status == 0 means the motion has completed
        if status is not None and getattr(status.msg, "motion_status", None) == 0:
            return True
        if time.monotonic() - start_t > timeout:
            return False
        time.sleep(poll_interval)


def main():
    robot_cfg = create_agx_arm_config(
        robot="nero",
        comm="can",
        channel="can0",
        interface="socketcan",
    )
    robot = AgxArmFactory.create_arm(robot_cfg)
    robot.connect()

    # Mode switching requires 1s delay before and after
    time.sleep(1)  # 1s delay before mode switch
    robot.set_normal_mode()
    time.sleep(1)  # 1s delay after mode switch

    # CRITICAL: The robot MUST BE ENABLED before switching modes
    while not robot.enable():
        time.sleep(0.01)

    robot.set_speed_percent(80)

    # After each mechanical arm operation, add a small sleep (0.01 seconds)
    # CRITICAL: All movement commands must be used in normal mode
    robot.set_motion_mode(robot.MOTION_MODE.J)
    robot.move_j([0.05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
    time.sleep(0.01)  # Small delay after move command
    wait_motion_done(robot, timeout=3.0)  # Shorter timeout

    # Optional: Disable before exit
    # while not robot.disable():
    #     time.sleep(0.01)


if __name__ == "__main__":
    main()
```

When generating code, replace or add motion steps (move_j / move_p / move_l / move_c, etc.) according to the user's description, and keep the connection, enabling, `wait_motion_done`, and units (radians/meters) consistent with this template.
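When a prompt describes several moves in a row, the generated script can keep the motion steps as data and run them through one loop, which keeps mode selection, the small post-command sleep, and completion waiting consistent. This is a minimal sketch: `run_sequence` and its step format are hypothetical, and it assumes only the `set_motion_mode`, `move_*`, and `MOTION_MODE` names from the table above plus a `wait` callable such as the template's `wait_motion_done`.

```python
import time
from typing import Callable, Sequence, Tuple

# Each step: (motion mode name, motion method name, positional args).
Step = Tuple[str, str, tuple]


def run_sequence(robot, steps: Sequence[Step], wait: Callable[..., bool],
                 timeout: float = 3.0) -> None:
    """Hypothetical helper: for each step, set the motion mode, issue the move,
    then wait for completion; raise if a step does not finish in time."""
    for mode_name, method_name, args in steps:
        robot.set_motion_mode(getattr(robot.MOTION_MODE, mode_name))
        getattr(robot, method_name)(*args)
        time.sleep(0.01)  # small delay after each arm operation, per the rules
        if not wait(robot, timeout=timeout):
            raise TimeoutError(f"step {method_name}{args} did not finish in {timeout}s")


# Example steps (not executed here): home, then a small joint-1 move.
# steps = [
#     ("J", "move_j", ([0.0] * 7,)),
#     ("J", "move_j", ([0.05, 0, 0, 0, 0, 0, 0],)),
# ]
# run_sequence(robot, steps, wait=wait_motion_done)
```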

- Next, teach OpenClaw this skill

After configuring the robotic arm's CAN communication and the Python environment, OpenClaw can automatically call the SDK driver to generate control code and control the robotic arm.

1 post - 1 participant

ROS Discourse General: LinkForge v1.3.0 — The Linter & Bridge for Robotics

Hi everyone!

LinkForge v1.3.0 was just released! But more than a release announcement, I want to take a moment to share the bigger vision of where this project is going — because it’s grown far beyond a Blender plugin.

The Vision


LinkForge is not just a URDF exporter for Blender. The architecture is intentionally built as a Hexagonal Core, fully decoupled from any single 3D host or output format.

The mission is simple:

Bridge the gap between creative 3D design and high-fidelity robotics engineering.

Design Systems (Blender, FreeCAD, Fusion 360) ➜ LinkForge Core ➜ Simulation & Production (ROS 2, MuJoCo, Gazebo, Isaac Sim)

Because in robotics, Physics is Truth. Every inertia tensor, every joint limit, every sensor placement should be mathematically correct before it ever reaches a simulator.
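As one concrete reading of "every inertia tensor should be mathematically correct": for a diagonal (principal-axis) inertia tensor, the moments must be positive and satisfy the triangle inequality, i.e. each moment is no larger than the sum of the other two. The sketch below computes the standard solid-box tensor and applies that check. It only illustrates the kind of validation described here; it is not LinkForge code, and the function names are hypothetical.

```python
from typing import Tuple


def box_inertia(mass: float, x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Principal moments (ixx, iyy, izz) of a solid box about its center of mass."""
    k = mass / 12.0
    return (k * (y * y + z * z), k * (x * x + z * z), k * (x * x + y * y))


def is_physically_valid(ixx: float, iyy: float, izz: float) -> bool:
    """Principal moments must be positive and satisfy the triangle inequality."""
    if min(ixx, iyy, izz) <= 0.0:
        return False
    return ixx <= iyy + izz and iyy <= ixx + izz and izz <= ixx + iyy


# A 12 kg box measuring 1 x 2 x 3 m passes; (1, 1, 3) cannot belong to any rigid body.
```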

What’s New in v1.3.0?


Performance


- Depsgraph caching and NumPy-accelerated inertia calculations for large robots

- Formal Kinematic Graph engine for cycle detection and topology validation
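A URDF model must form a tree: one root link, every other link with exactly one parent joint, and no cycles. The sketch below shows a generic version of such a topology check using only the standard library. It illustrates the idea and is not LinkForge's Kinematic Graph engine; the input format (a list of `(parent_link, child_link)` pairs, one per joint) is an assumption.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def validate_tree(joints: Iterable[Tuple[str, str]]) -> List[str]:
    """Return topology errors for (parent_link, child_link) joint pairs.

    An empty list means the links form a single tree.
    """
    errors: List[str] = []
    children = defaultdict(list)
    parent_of = {}
    links = set()
    for parent, child in joints:
        links.update((parent, child))
        if child in parent_of:
            errors.append(f"link '{child}' has more than one parent")
        parent_of[child] = parent
        children[parent].append(child)

    roots = sorted(links - set(parent_of))
    if len(roots) != 1:
        errors.append(f"expected exactly one root link, found {roots}")

    # Walk down from the root(s); links never reached sit on a cycle or are disconnected.
    seen = set()
    stack = list(roots)
    while stack:
        link = stack.pop()
        if link in seen:
            continue
        seen.add(link)
        stack.extend(children[link])
    unreachable = sorted(links - seen)
    if unreachable:
        errors.append(f"links not reachable from a root (cycle?): {unreachable}")
    return errors
```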

ros2_control Intelligence


- Joint sync, XACRO modularity improvements, and 100% core test coverage for the control pipeline

- `ros2_control` hardware interfaces are now auto-generated from the dashboard, with an enhanced joint model (safety/calibration support)

Key Bug Fixes


- Resolved XACRO import failures for ros2-style robot descriptions (e.g., `$(find pkg)/...` macro resolution)

- Robust mesh import cleanup and improved XACRO empty-placeholder pruning

Component Search


- Added a component search filter to the component browser — makes large, multi-link robots much easier to navigate

Looking for Contributors


The upcoming roadmap includes SRDF Support, the linkforge_ros package, and a Composer API for modular robot assemblies.

If you work in ROS 2, enjoy Python or Rust, or have ideas on how to improve the URDF/XACRO workflow, come say hi:

What is your biggest URDF/XACRO pain point today? I’d love to know what the community needs most as we plan the next milestone!

1 post - 1 participant

ROS Discourse General: Tom Ryden talks robotics trends at Boston Robot Hackers on March 5!

We are excited to share news about the next monthly meeting of the Boston Robot Hackers! If you are in the Boston area, check it out and please register for the event.

Where: Artisans Asylum, 96 Holton Street, Boston, MA 02135

When: Thursday March 5, 7:00-9:00pm

Speaker: Tom Ryden of MassRobotics

If you are into Robotics (and, by definition, you are, given you are reading this!) this promises to be a very interesting talk! Tom will start with an overview of MassRobotics and then get into what the current trends are in the robotics market: what problems are start-ups addressing, how the fundraising market is today, and where the investment dollars are going.

And if you cannot make it, still consider joining our organization!

1 post - 1 participant

ROS Discourse General: Intrinsic joining Google as a distinct robotics and AI unit

Hi Everyone,

As you may have seen in the recent blog post, Intrinsic is joining Google as a distinct robotics and AI unit. Specifically, Intrinsic’s platform will bring a new “infrastructural bridge” between Google’s frontier AI research (such as the AI coming from teams at Gemini and DeepMind) and the practical, high-stakes requirements of industrial manufacturing, which is Intrinsic’s focus. This decision will allow our team to continue building the Intrinsic platform, and operate in a very similar way to before. Our commercial mandate remains the same, as does our focus on delivering intelligent solutions for our customers.

Intrinsic remains dedicated to the commitments we’ve made to the open source community, to ROS, Gazebo and Open-RMF (including Lyrical and Kura release roadmaps) and deepening our platform integrations with ROS over time. We’re also very excited about the AI for Industry Challenge this year, which is organized with the team at Open Robotics and has thousands of registrants so far.

From the community’s perspective we are expecting minimal disruption, if any, and we look forward to showing and sharing more news at ROSCon in Toronto later this year.

4 posts - 4 participants

ROS Discourse General: ROS Lyrical Release Working Group

The ROS Lyrical Release Working Group will have its first meeting on Fri, Feb 27, 2026, from 7:00 PM to 8:00 PM UTC.

Want to come? Give feedback on the time here: Meeting time: ROS Lyrical Release WG

Meeting link: https://openrobotics-org.zoom.us/meetings/81224698184/invitations?signature=SrUjwX951phQQqx25bNfAA-MFEpABNsKY1vAAWfp91s

Notes and Agenda: https://docs.google.com/document/d/1lkilmVulAUF1qVRsmimMa1cJtO2jOLoGy75itOCwc78/edit?usp=sharing

Agenda:

- Identify what’s being worked on, and who is working on it

- Make a list of pull requests that need review

- Discuss a proposed release timeline

- Open discussion about what needs to be worked on, and what decisions need to be made

1 post - 1 participant

ROS Discourse General: Meeting Summary for Accelerated Transport Working Group 02/18/2026

In this meeting we discussed

- Different options that the solution can provide for backward compatibility

- Support in rclpy

- Support in rclc

- Break ABI/API

Remember that the meeting happens every week to push this feature into Lyrical Luth. Please check the Open Source Robotics Foundation official events to join the next meeting.

Join the #Accelerated Memory Transport Working Group to discuss more details on Zulip

1 post - 1 participant

ROS Discourse General: Meeting Summary for Accelerated Transport Working Group 02/11/2026

We had the first meeting in the Accelerated Memory Transport WG. The meeting focused on discussing a new prototype presented by Karsten and CY from NVIDIA.

We discussed some topics:

- How to handle large messages between ROS nodes, particularly for image data, tensors, and point clouds, by introducing custom buffer types that can be mapped to non-CPU memory.

- Backend loading and compatibility, leading to a discussion about whether the feature should be opt-in or opt-out, with Michael suggesting it could be made default in a future release after initial adoption.

- The group also discussed wire compatibility concerns and potential workarounds, including using maximum length values or special annotations in message types.

- Join the #Accelerated Memory Transport Working Group to discuss more details on Zulip

Please check the Open Source Robotics Foundation official events to join the next meeting.

1 post - 1 participant

ROS Discourse General: Transitive Core Concepts — #1: Full-stack Packages: robot + cloud + web

Working with nine different robotics companies over the course of 10 years has taught us a thing or two about designing robotic full-stack architectures. All this experience went into the design of Transitive, the open-source framework for full-stack robotics. We’ve started a new mini-series of blog posts where I dive into the three core concepts of the framework. Too often do we see robotic startups fall into the same pitfalls when designing their full-stack architecture (robot + cloud + web). Therefore it is important to us to share our experience and explain why we built Transitive the way we did.

In this first post you’ll learn about the need for cross-device code encapsulation, how we addressed this need in Transitive via full-stack packages, and what benefits result from this approach for growing your fleet and functionality without increasing complexity.

1 post - 1 participant

ROS Discourse General: Ros2_canopen shortcomings and improvements

During the integration of our hardware we (inmach.de) encountered some shortcomings in the ros2_canopen package which we worked around or fixed in our fork of ros2_canopen. We’d like to get these changes into the upstream repo so that everyone can profit from them.

The major shortcomings we found and think should and could be improved are:

- The CIA402 Driver only supports 1 axis, but the standard allows up to 8 axes (our hardware supports 4 axes)

- The `canopen_ros2_control` systems cannot handle different types of CAN devices on the same CAN bus, so one has to write a custom system in this case. This gets even worse if a node with a custom API is to be used.

- Because `ros2_control` just reuses the normal `Node` implementation, the ROS topics/services are always available too, which can easily bypass the `ros2_control` controllers.

- The use of a template for `ros2_canopen::node_interfaces::NodeCanopenDriver` to handle both `Node` and `LifecycleNode` probably has historic reasons. The currently proposed ROS way to handle this case is to use `rclcpp::node_interfaces`.

With this post I’d like to start a discussion with the ROS community and the maintainers (@c_h_s, @ipa-vsp) of ros2_canopen about other possible shortcomings and what needs to be and can be done to improve the ros2_canopen stack, so that together we can make it even better in the years to come.

5 posts - 3 participants

ROS Discourse General: Predictability of zero-copy message transport

Hey! I’m looking to improve my ROS 2 code performance with the usage of zero-copy transfer for large messages.

I’ve been under the impression that simply composing any composable node into a container, and setting “use_intra_process_comms” to True would lead into zero-copy transfer. But after experimenting and going through multiple tutorials, design docs, and discussions, that doesn’t seem to be the case.

I wanted to create this thread to write down some of my questions, in the hopes of them being helpful for improving the documentation, and to get a better understanding of the zero-copy edge cases. I’m also curious to hear if there are already ways to easily verify that the zero-copy transfer is happening.

To my understanding, it looks like there are a bunch of different things that can have an influence if zero-copy happens or not:

- Pointer type: The choice of SharedPtr, UniquePtr, etc. seems to affect whether zero-copy really happens [1], [2]

- Number of subscribers: If we have many subscriptions to the same topic, some of the subscriptions might be actually creating a copy of the message [1]

- QoS: Some of the quality of service types have been at least in the past unsupported [3]

- RMW implementation: At least in the past, the middleware choice has played a role. How is it nowadays? How about with Zenoh? [3]

- ROS Distribution version: Are there differences between existing distros (Humble to Rolling?)

- Component container type: Based on my past experimentation, there seems to be a difference between the container types: `component_container` vs. `component_container_mt` vs. `component_container_isolated`.

- A new inter-process subscriber outside of the composable container: What happens if we have a new inter-process subscription outside of the composable container?

- Publisher outside of the composable container: How does zero-copy behave when, for example, the publisher node is outside the composable container? Can multiple subscribers still benefit from zero-copy? From my past experiments, it seems that they can.

- Is there something else that can have an influence?

I’m looking to understand what are the cases when the zero-copy transfer really happens, and in which cases ROS just quietly falls back to copying the messages.

Many of these questions also boil down to a bigger question: How can I verify whether zero-copy happens, and what kind of performance benefits am I getting from using it? All the demos I’ve seen until now simply print the memory address of the message to confirm that zero-copy happens. I think it would be highly beneficial to have a better way directly in ROS 2 to see if zero-copy pub-sub is actually happening. Is there already a way to do that, or do you see how this could be implemented? Maybe through the ros2 topic CLI?

In addition to the above questions, the tutorials and other resources still left me wondering about these ones:

- What are all the different ways of achieving zero-copy transfer? Via Loaned messages? What are the benefits of it compared to intra-process communication (IPC)? In Jazzy, loaned messages tutorial has a mention “Currently using Loaned Messages is not safe on subscription” [4]

- What are the performance gains of zero-copy? In which situations is serialization completely avoided, and in which situations is the middleware layer skipped entirely?

- What is the role of the “use_intra_process_comms” parameter? I’ve sometimes observed zero-copy happening even when this parameter is set to false. What are the benefits of having it as “false” (which it is by default when nodes are composed in a launch file)?

[1] ROS Jazzy Tutorial - Intra-Process-Communication

[2] Discourse Thread - Performance Characteristics: subscription callback signatures, RMW implementation, Intra-process communication (IPC)

[3] ROS 2 Design Article - Intraprocess communications

[4] ROS Jazzy Tutorial - Configure Zero Copy Loaned Messages

3 posts - 2 participants

ROS Discourse General: New kid on the block: meet Ajime, robotics CI/CD next-gen platform

Hello Roboticists!

We are building Ajime (https://ajime.io) to provide zero-config pipeline building: Ajime is a drag-and-drop CI/CD experience for edge computing & robotics. Just link your GitHub repository; we handle the build and deployment of CUDA-ready containers, manage your cloud/on-prem databases and compute resources (and also provide fast hosting), and provide secure fleet connectivity over the cloud. As easy as building with Lego.

Whether you’re deploying to an NVIDIA Jetson, a Raspberry Pi, or any other Linux-based SOM, Ajime automates the entire pipeline, from LLM-generated Dockerfiles with sensor drivers to NVIDIA Isaac Sim validation. We’re in private beta and looking for engineers to help us kill the “dependency hell” of robotics DevOps. Check out the demo and join the waitlist at ajime.io.

2 posts - 2 participants

ROS Discourse General: Canonical Observability Stack Tryout | Cloud Robotics WG Meeting 2026-02-25

Please come and join us for our next meeting on Wed, Feb 25, 2026, from 4:00 PM to 5:00 PM UTC, where we plan to deploy an example Canonical Observability Stack instance based on information from the tutorials and documentation.

We did originally plan to host this session on 2026-02-11, but unfortunately had to cancel, so the session has been moved back.

In the previous meeting, the CRWG invited Guillaume Beuzeboc from Canonical to present on the Canonical Observability Stack (COS). COS is a general observability stack for devices such as drones, robots, and IoT devices. It operates on telemetry data, and the COS team has extended it to support robot-specific use cases. If you’re interested in watching the talk, it is available on YouTube.

The meeting link for next meeting is here, and you can sign up to our calendar or our Google Group for meeting notifications or keep an eye on the Cloud Robotics Hub.

Hopefully we will see you there (and we won’t need to cancel again)!

1 post - 1 participant

ROS Discourse General: Agent ROS Bridge — Universal LLM-to-ROS bridge with auto-generated types

Hi ROS devs!

I built something to bridge the gap between AI agents and ROS robots. Instead of writing custom interfaces for every LLM integration, this gives you a universal bridge with zero boilerplate.

**Key features:**

- Auto-generates Python classes from .msg/.srv files

- One decorator = ROS action/service/actionlib

- gRPC + WebSocket APIs for remote agents

- Works with ROS1 and ROS2 (humble/jazzy tested)

- Full Docker playground with 4 examples

**The examples:**

1. **Talking Garden** — LLM monitors/controls IoT plants via ROS topics

2. **Mars Colony** — Multi-robot coordination (excavator, builder, solar, scout)

3. **Theater Bots** — AI director + robot actors with scripted behaviors

4. **Art Studio** — Human and robot painters collaborating on canvas

**Quick start:**

```bash
pip install agent-ros-bridge

# In your Python code:
from agent_ros_bridge import ROSBridge

bridge = ROSBridge(ros_version=2)

@bridge.action("move_to")
def move_to(x: float, y: float):
    # Your robot movement code
    pass
```

GitHub: webthree549-bot/agent-ros-bridge

PyPI: agent-ros-bridge

Open to issues, PRs, and feedback!

3 posts - 2 participants

ROS Discourse General: 2025 ROS Metrics Report


2025 ROS Metrics Report.pdf (3.7 MB)

For comparison, here’s the 2024 Metrics Report.

Once a year, we take a moment to evaluate the health, growth, and general well-being of the ROS community. Our goal with this annual report is to provide a relative estimate of the community’s evolution and composition to better help us plan for the future and allocate resources.

As an open-source project, we prioritize user privacy above all else. We do not track our users, and as such, this report relies on aggregate statistics from services like GitHub, Google Analytics, and download data from our various servers. While this makes data collection difficult, and the results don’t always capture the information we would like, we are happy to report that the data we have captured clearly show a thriving and rapidly growing ROS ecosystem!

2025 Report Highlights

The full report is available for download here (3.7 MB). If you would like just the highlights, we’ve summarized the top-line results below.

- 984,135,185 ROS packages were downloaded in 2025, representing an 85.18% increase over 2024 (this is despite missing data for July, see note below).

- Over 1,300,000 individuals / unique IPs downloaded ROS packages in October 2025.

- ROS 2 now makes up 91.2% of all ROS downloads.

- ROS Humble currently makes up 48.53% of all ROS downloads.

- ROS Jazzy currently makes up 24.45% of all ROS downloads.

- ROS Index visitors have increased by 63.3%.

- The ROS 2 GitHub organization saw an 11.2% increase in contributors and a 37.59% increase in the number of pull requests.

- Discourse posts have increased by 24% and viewership has increased by 29.7%.

- Our newest ROS 2 paper (Macenski et al., 2022) had 1,929 citations, representing 90% growth year over year.

- 92.14% of Gazebo downloads are now for modern versions of Gazebo.

A Landmark Year for Community Growth

The 2025 metrics highlight a massive surge in users across almost all of our websites and servers. In October 2025, ROS 2 package downloads saw a staggering 284% increase over the previous year. ROS 2 package downloads now make up the overwhelming majority of ROS package downloads (91.2% of all downloads in October 2025). This growth isn’t just from users transitioning from ROS 1 to ROS 2; most of it appears to be explosive growth in the number of ROS 2 users overall. The number of unique users / IPs downloading ROS packages grew from 843,959 in October 2024 to 1,315,867 in October 2025, an increase of just shy of 56%!

Meanwhile, ROS 1 downloads declined slightly from 12,206,979 packages in October 2024 to 11,590,884 in October 2025, a decrease of slightly over 5%. The ROS Wiki, which is now at End-of-Life, saw an 8.5% decrease in users, a trend we view positively as the community migrates away from ROS 1 and toward modern documentation platforms. Similarly, there were only 5 questions tagged with “ROS1” on Robotics Stack Exchange in 2025, in contrast to the 1,449 questions tagged “ROS2.” On every platform, and by every metric, ROS 2 is now the dominant platform for ROS development.
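The percentages quoted for unique IPs and ROS 1 downloads can be re-derived from the raw October counts. A quick check in Python (the `pct_change` helper is only for illustration):

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100.0


unique_ip_growth = pct_change(843_959, 1_315_867)            # Oct 2024 -> Oct 2025
ros1_download_change = pct_change(12_206_979, 11_590_884)    # Oct 2024 -> Oct 2025

print(f"unique IPs: {unique_ip_growth:+.1f}%")          # prints "unique IPs: +55.9%"
print(f"ROS 1 downloads: {ros1_download_change:+.1f}%")  # prints "ROS 1 downloads: -5.0%"
```

Both results match the text: growth just shy of 56%, and a decline of slightly over 5% (about 5.05%).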

Our discussion platforms are also busier than ever. Annual topics on ROS Discourse rose by 40% (to 1,472), and annual posts increased by 24% (to 4,901). Overall viewership of Discourse grew by nearly 30%. Similarly, our community on LinkedIn has increased by 23.9% and hovers just shy of 200,000 followers. The only notable decrease of any ROS metric was on Robotics Stack Exchange, which has seen a 42.49% decrease in the number of questions asked. This decrease mirrors larger industry-wide trends as developers turn to large language models to answer their technical questions.

ROS 2 Adoption and Industry Momentum

The shift to ROS 2 has reached a definitive milestone, with package downloads now overwhelmingly centered on ROS 2 and likely surpassing one billion per year. This massive download volume is a testament to ROS’s utility and widespread adoption. We are especially encouraged by the growing health of the ecosystem, which now features 34,614 unique ROS packages available via Apt (an increase of 9.15% over the previous year). This growth in package availability directly translates into greater functionality and choice for our users.

The dedication of the developer community is evident in the flourishing number of public repositories on GitHub: 3,848 repositories are tagged with “#ROS2” (a 39% increase in 2025), alongside 8,744 public repositories tagged with “#ROS” (up 4.73% since Jan 2025), demonstrating increasing development activity. Furthermore, the relevance of ROS in industry is undeniable: our private list of ROS companies grew 26% this year to 1,579 companies, showing strong commercial validation. In the academic sphere, our canonical ROS 2 paper continues to demonstrate explosive growth with 1,929 citations (up 89.9% in 2025), confirming the platform’s role in cutting-edge research. Collectively, these metrics confirm ROS 2’s status as the established platform for the next generation of robotics development, driving significant growth across both commercial and research sectors.

Conclusion and Feedback

The data from 2025 depicts a thriving, maturing ecosystem that is increasingly centered on modern ROS 2 and modern Gazebo tools. We are immensely proud of this community’s growth and its successful shift toward next-generation robotics software!

We encourage you to dive into the full report for a more detailed breakdown of these metrics. We also encourage you to take a look at the ROS project contributor metrics published by our colleagues at the Linux Foundation for a detailed breakdown of project contribution statistics. As always, we would love to hear your thoughts on what metrics you would like to see included in future reports.

A Note on 2025 Data

Our goal with the ROS metrics report is to develop an understanding of the magnitude and direction of changes in the ROS open source community so we can make better decisions about where we allocate our time and resources. As such, we’re looking for ballpark estimates to help guide decision making, not necessarily exacting figures. This year, due to circumstances beyond our control, we’ve had to fill in some gaps in our data as explained below. We believe the numbers reported here paint a reasonable lower bound on various phenomena in the ROS community.

Our ROS package download statistics are culled from an AWStats instance running on our OSU OSL servers. In July of 2025 we moved our AWStats host at OSU OSL and upgraded AWStats ahead of its imminent deprecation. Unfortunately, this migration had two negative side effects that impacted our results for 2025. First, it caused us to lose most of our AWStats data for the month of July, 2025. Second, the upgrade did not provide a migration utility for existing log data, and our AWStats summary page for 2025 only presents data for the six months after the migration. Thankfully, we still have the raw log data for the preceding six months (with the exception of July), and we were able to manually re-calculate the results for most metrics, albeit missing some data from the month of July.

For our Gazebo download metrics, we rely upon the Apache logs available on an OSRF AWS instance and AWStats download data from the OSU OSL servers. For privacy reasons, we do not retain the Apache log data in perpetuity; instead, we rely on a logging buffer that periodically rolls over. In prior years this buffer was sufficient to capture well over a month’s worth of Gazebo download data. Gazebo downloads have grown significantly over the past year, and when we evaluated our logs, we found that only a little over two weeks’ worth of data was available. As such, we decided to evaluate the download data over a two-week period from January 13 until January 27 and extrapolate those results to the entire month.
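The note does not spell out how the two-week sample was scaled up. Assuming simple linear scaling by day count (our assumption, not the report’s stated method, and the function name is hypothetical), the extrapolation can be sketched as:

```cpp
#include <cassert>

// Linearly scale a count observed over a partial window up to a full period.
// Assumes downloads are spread evenly across days, which is only a rough model.
double extrapolateLinear(double observedCount, int observedDays, int periodDays) {
    assert(observedDays > 0 && observedDays <= periodDays);
    return observedCount * static_cast<double>(periodDays) / observedDays;
}
```

For January (31 days), whether the January 13 to January 27 window is counted as 14 days (end-exclusive) or 15 days (inclusive) changes the estimate by about 7% (15/14 ≈ 1.07), which is within the ballpark precision this note aims for.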

2 posts - 1 participant

ROS Discourse General: Proposal: Add ADOPTERS to showcase ROS 2 production users

Hi,

I’ve opened an issue proposing to add an ADOPTERS file to the ROS documentation: a centralized, community-maintained list of organizations using ROS in production.

Please have a look at the issue and give me your feedback:

- Does this seem valuable to the community?

- What fields or structure would you find most useful?

- Would your organization be willing to be listed?

If there’s interest, I’m happy to submit an initial PR to get things started. Please share your thoughts here or on the GitHub issue.

Thanks,

tomoya

1 post - 1 participant

ROS Discourse General: Research grade robot recommendations in 2026

I want to test analytics software I’m developing on a wide variety of movements and ROS2 frameworks (e.g. MoveIt, Nav2, etc.) and sensor types, and I’m looking for recommendations on robots that are a good balance between low cost and a broad range of functionality. For example, I’m thinking of a combination of a Turtlebot 4 for a mobile robot and a Waveshare RoArm M3 for a robot arm with some Gen AI capabilities. I’m sure a lot of people have experience with the Turtlebot here but I’m curious what your recommendations would be in general.

By the way, I’m new here and wasn’t sure what category to post this in. Please let me know if there’s a better place for this discussion. Thanks in advance.

5 posts - 4 participants

ROS Discourse General: Service for robot description?

I was discussing this topic with a colleague and am interested in some other opinions. He was proposing using the GetParameter service to get the robot description from the robot_state_publisher node. I was suggesting we subscribe to /robot_description. We are working in a single-robot environment.

What do you prefer and why?

The way I see it, the service call makes the code using the robot description clearer: you can see it’s only received once, and the wait for the response is explicit. In contrast, the topic subscription code looks like you might be receiving it periodically.

On the other hand, you now have to specify the node and parameter name, so if for some reason RSP isn’t there or doesn’t have the robot_description parameter, and some other node is publishing it instead, it won’t work. But to be honest, I’ve never seen this be the case in any ROS 2 system I’ve worked with.

Maybe the best of both worlds would be a /get_robot_description service on robot_state_publisher? Or maybe rclpy needs some built-in helpers to make getting the robot description (or latched topics in general) cleaner? Or maybe these things already exist and I am unaware of them.

Looking forward to hearing from others on this topic!

2 posts - 2 participants

ROS Discourse General: ROS 2 Lyrical C++ Version

As we begin the planning phase for the ROS 2 Lyrical release, the PMC is considering an upgrade to our core language requirements. Specifically, we are looking at making C++20 the default standard for the Lyrical distribution.

Why now?

The PMC has reviewed our intended Tier 1 target platforms for this cycle, and they all appear to support modern toolchains with mature C++20 implementations. These targets include:

- Ubuntu 26.04 (Resolute)

- Windows 11

- RHEL 10

- Debian 13 (Trixie)

We Need Your Feedback

While the infrastructure seems ready, the PMC wants to make sure we do not inadvertently break any critical workflows or orphan embedded environments that might be constrained by older compilers.

We would like to hear from you if:

- You are targeting an LTS embedded platform or an RTOS that lacks a C++20-compliant compiler.

- You maintain a core package that would face significant architectural hurdles by incrementing the standard.

- You have specific concerns regarding binary compatibility or cross-compilation with existing C++17 libraries.

The goal is to move the ecosystem forward without leaving anyone behind. If you anticipate any friction, please share your thoughts below.

2 posts - 1 participant

ROS Industrial: ROS 2 in Industry: Key Takeaways from the ROS-Industrial Conference 2025

The 13th ROS-Industrial Europe Conference 2025 took place on 17–18 November 2025 in Strasbourg, co-located with ROSCon FR&DE. The event brought together industrial practitioners, researchers, and technology providers to share practical experience with deploying ROS 2 in production environments, discussing both proven approaches and remaining challenges.

Hosted at the CCI Campus Alsace – Site de Strasbourg, the program covered robotics market insights, vendor perspectives, and technical topics such as driver development and real-time control. Further sessions addressed humanoid safety, modular application frameworks, and industrial expectations regarding determinism and long-term maintainability. Updates from the different regional ROS-Industrial consortia provided a broader international perspective.

The event concluded with a hands-on company visit to ENGLAB, allowing participants to see robotics solutions in action beyond the conference hall.

The event page links to the slides and presentation videos.

Day 1 Highlights: From Market Momentum to “ROS 2 Going Industrial”

Werner Kraus opened the conference with an introduction to Fraunhofer IPA and a global robotics market overview. He highlighted strong growth trends, particularly in medical and humanoid robotics, and emphasized that safety in humanoid systems remains a critical research and engineering frontier.

Felix Exner from Universal Robots presented ongoing development of ROS interfaces for robot controllers, including motion-primitive-based approaches. He addressed a recurring industry challenge: maintaining a stable ROS ecosystem across multiple distributions while balancing documentation quality, development agility, and long-term support strategies.

Robert Wilbrandt from the FZI Research Center for Information Technology shared insights into RSI integration, asynchronous control strategies, and the practical integration challenges that arise when transitioning research prototypes into industrial systems. His talk also highlighted key software-architecture considerations such as driver lifecycles, memory management, and allocation tracking—turning “robustness” into measurable engineering practices.

Alexander Mühlens from igus GmbH showcased several ROS-powered innovations and real-world deployments, with particular focus on the RBTX marketplace and the value of ecosystems in reducing cost, risk, and complexity for robotics adoption. His examples demonstrated how accessible, composable solutions can accelerate industrial uptake.

Adolfo Suarez Roos from IRT Jules Verne discussed Yaskawa drivers and industrial applications ranging from medical finishing processes to offshore welding automation. A key message was that successful deployments depend on tight integration decisions—including controller capabilities, communication frequency, and compatibility constraints—tailored to the realities of the shop floor.

Lukasz Pietrasik from Intrinsic presented a practical approach to integrating ROS with broader AI and software platforms. Topics included developer workflows, digital-twin environments, behavior-tree-based task composition, and bridging ROS data and services into higher-level orchestration platforms.

Afternoon Focus: Safety, Resilience, and Industrial Expectations

Florian Weißhardt from Synapticon GmbH addressed the unique safety challenges of humanoid robots, where unpredictability, balance loss, and autonomy make traditional “safe state” concepts insufficient. His session reinforced a central theme of the day: as robots move into unstructured environments, safety becomes a system-level design challenge rather than a single-component feature.

Florian Gramß from Siemens AG explored the tension between traditional deterministic automation and the flexibility offered by ROS-based systems. He advocated for hybrid architectures—deterministic where required, flexible where possible—as a realistic path forward for modern industrial automation.

Riddhesh Pradeep More presented his work on semantic discovery and rich descriptive models for reusable ROS software components, demonstrating how knowledge graphs and vector-based semantic search can significantly improve the identification, understanding, and reuse of ROS packages across domains such as navigation, perception, SLAM, and manipulation.

Dennis Borger showcased applied ROS 2 research projects including robotic bin-picking and automated post-processing, highlighting how modular architectures, hybrid vision approaches, and AI-supported workflows enable flexible automation solutions for small-batch and customized industrial production scenarios.

Denis Stogl and Nikola Banović from b-robotized GmbH shared practical experiences in bringing ROS 2 into real industrial environments, emphasizing the role of ros2_control, hardware abstraction, diagnostics, and seamless integration with industrial communication protocols such as EtherCAT, CANOpen, and Modbus to achieve production-ready robotic systems.

The first day concluded with a Gala Dinner, where informal discussions and networking often proved as valuable as the scheduled presentations.

Day 2 Highlights: Consortium Alignment and Advanced Applications

Consortium Updates Across Regions

The second day began with updates from across the global ROS-Industrial network:

• Vishnuprasad Prachandabhanu and Yasmine Makkaoui on ROS-Industrial Europe initiatives

• Maria Vergo and Glenn Tan on Asia-Pacific ecosystem orchestration, sandboxes, and large-scale deployments

• Paul Evans from the Southwest Research Institute on ROS-Industrial Americas roadmap priorities, technical progress, and improvements in usability and tooling

Louis-Romain Joly from SNCF introduced nav4rail, a navigation stack tailored specifically for railway maintenance robots. His key insight was that in constrained domains—such as effectively one-dimensional rail movement—simpler, model-driven solutions can outperform general-purpose navigation frameworks in both clarity and engineering efficiency.

Mario Prats from PickNik Robotics closed the conference with advancements in mobile manipulation workflows and the continued evolution of MoveIt toward professional-grade tooling, highlighting behavior-tree composition, real-time control capabilities, and an AI-oriented roadmap.

Closing Takeaway: Industrial ROS Maturing Through Engineering Reality

The conference confirmed that ROS 2 is steadily gaining ground in real industrial environments. A wide range of practical use cases, improved interoperability through ros2_control and fieldbus integration, and increasing adoption of behavior-tree-based architectures demonstrate clear technical progress.

At the same time, challenges remain, particularly in documentation quality and real-time performance. Safety, AI integration, and driver development continue to shape the technical agenda, while expectations for new collaborative initiatives such as a potential ROSin 2.0 underline the need for sustained ecosystem support.

ROS Discourse General: [Call for Papers] RoSE’26 (Robotics Software Engineering) @ ICRA Vienna -- due March 8

Hi all,

Software is the invisible thread that weaves the fabric of robotics: it turns sensors into perception, models into decisions, and hardware into reliable behavior in the real world. As our systems scale from demos to deployment, robust engineering practices (architecture, testing, tooling, debugging, benchmarking, and reproducibility) often determine success.

With that in mind, we’re inviting submissions to

RoSE’26 (Robotics Software Engineering) Workshop @ ICRA Vienna


Submission deadline: March 8 (20 days to go)

What are we looking for?

We welcome contributions that share actionable software engineering insights for robotics, including (but not limited to):

- ROS/ROS 2 system & package architecture patterns (and lessons learned)

- Testing & quality: CI, simulation + HIL, regression testing, reproducibility

- Tooling: build/release workflows, dependency management, static analysis

- Runtime robustness: logging, introspection, monitoring, debugging, recovery

- Benchmarking & evaluation practices for robotics software

- Deployment at scale: updates, configuration, fleet/edge deployment practices

- Maintenance realities: migrations, long-lived systems, technical debt management

If you’ve built something others could reuse, or learned something the hard way, RoSE is a great venue to share it.

Examples of ROS-focused RoSE papers from previous editions

To give a sense of the kinds of ROS/ROS 2 topics that have fit well at RoSE:

- Energy Efficiency of ROS Nodes in Different Languages: Publisher-Subscriber Case Studies (RoSE’24): https://rose-workshops.github.io/files/rose2024/papers/rose_2024_1.pdf

- Towards Automated Verification of ROS 2 Systems – A Model-Based Approach (RoSE’24): https://rose-workshops.github.io/files/rose2024/papers/rose_2024_7.pdf

- Getting started with ROS2 development: a case study of software development challenges (RoSE’23): https://rose-workshops.github.io/files/rose2023/papers/RoSE2023_paper_1.pdf

- ROMoSu: Flexible Runtime Monitoring Support for ROS-based Applications (RoSE’23): https://rose-workshops.github.io/files/rose2023/papers/RoSE2023_paper_3.pdf

Submission details and workshop info

Website (CFP + instructions):

Questions about fit or format? Feel free to reply here.

We would also appreciate it if you shared this with your peers.

Hope to see many ROS-flavored software engineering lessons represented at RoSE’26!

–

Ricardo Caldas

on behalf of the RoSE’26 Organizing Committee

1 post - 1 participant

ROS Discourse General: Cornea: Image Segmentation Skills from the Telekinesis Agentic Skill Library

Introducing Cornea: Image Segmentation Skills from the Telekinesis Agentic Skill Library.

Cornea is a module in the Telekinesis Agentic Skill Library containing skills for 2D image segmentation: https://docs.telekinesis.ai/

It provides segmentation capabilities using classical computer vision techniques and deep learning models, allowing developers to extract structured visual information from images for robotics applications.

What Does Cornea Provide?

- Color-based segmentation: RGB, HSV, LAB, YCrCb

- Region-based segmentation: Focus region, Watershed, Flood fill

- Deep learning segmentation: BiRefNet (foreground), SAM

- Graph-based segmentation: GrabCut

- Superpixel segmentation: Felzenszwalb, SLIC

- Filtering: Filter by area, color, mask

- Thresholding: Global threshold, Otsu, Local, Yen, Adaptive, Laplacian-based
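Cornea’s own API is documented at the link above and isn’t reproduced here. To illustrate one of the listed techniques independently of that library, here is a sketch of Otsu thresholding on a 256-bin grayscale histogram: it picks the threshold t that maximizes the between-class variance ω₀ω₁(μ₀ − μ₁)². The function name is hypothetical and this is not Cornea’s implementation.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Otsu's method: returns the threshold t maximizing between-class variance,
// with pixels <= t in class 0 and pixels > t in class 1. Returns 0 if no split
// separates the histogram (e.g. every pixel has the same value).
int otsuThreshold(const std::array<std::uint64_t, 256>& hist) {
    std::uint64_t total = 0;
    double sumAll = 0.0;  // sum of all pixel intensities
    for (int i = 0; i < 256; ++i) {
        total += hist[i];
        sumAll += static_cast<double>(i) * hist[i];
    }
    assert(total > 0 && "histogram must not be empty");

    std::uint64_t count0 = 0;  // pixels in class 0 (values <= t)
    double sum0 = 0.0;         // intensity sum of class 0
    double bestVariance = -1.0;
    int bestT = 0;
    for (int t = 0; t < 256; ++t) {
        count0 += hist[t];
        sum0 += static_cast<double>(t) * hist[t];
        const std::uint64_t count1 = total - count0;
        if (count0 == 0 || count1 == 0) continue;  // one class empty: no split
        const double w0 = static_cast<double>(count0) / total;
        const double w1 = static_cast<double>(count1) / total;
        const double mu0 = sum0 / count0;
        const double mu1 = (sumAll - sum0) / count1;
        const double betweenVar = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (betweenVar > bestVariance) {
            bestVariance = betweenVar;
            bestT = t;
        }
    }
    return bestT;
}
```

For a clearly bimodal image (say, a dark part on a bright tray), the returned threshold falls between the two peaks, and the resulting binary mask is the kind of pixel-level input the pick-and-place use cases below rely on.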

When to Use Cornea?

Use Cornea for robotics applications that require pixel-level understanding of images, such as:

- Vision-guided pick-and-place pipelines

- Palletizing and bin organization

- Object isolation for manipulation and grasp planning

- Obstacle detection in camera-based navigation

- Scene understanding for Physical AI agents

2 posts - 1 participant

ROS Discourse General: [Release] LinkForge v1.2.3: 100% Type Safety, Parser Hardening & ROS-Agnostic Assets

Hi everyone!

I’m excited to announce the release of LinkForge v1.2.3 — Professional URDF & XACRO Bridge for Blender.

This release marks a major stability milestone, achieving 100% static type safety across the Blender codebase and introducing significant robustness improvements to the core parser.

Key Highlights

- ROS-Agnostic Asset Resolution: We’ve introduced a hybrid package resolver that allows you to import complex robot descriptions (with package:// URIs) on any OS, without needing a local ROS installation. This effectively bridges the gap between design teams (on Windows/macOS) and engineering teams (on Linux).

- Parser Hardening: The import logic is now much more resilient to edge cases, malformed XML, and unusual file paths.

- 100% Type Safety: A complete refactor of the Blender integration ensures maximum stability and fewer runtime errors.

- DAE Support Restored: Full support for Collada meshes has been restored for legacy robot compatibility.
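The post doesn’t show how LinkForge resolves package:// URIs. Conceptually, a resolver that works without ROS only needs a table mapping package names to directories. Here is a minimal, hypothetical sketch of that idea; the function name and the root table are ours, not LinkForge’s API, and it handles no URL escaping.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>

// Hypothetical resolver: maps "package://<name>/<relative path>" to a filesystem
// path using a user-supplied table of package roots (no ROS installation needed).
std::optional<std::string> resolvePackageUri(
    const std::string& uri, const std::map<std::string, std::string>& packageRoots) {
    const std::string scheme = "package://";
    if (uri.rfind(scheme, 0) != 0) {
        return uri;  // not a package:// URI: treat it as an ordinary path
    }
    const std::string rest = uri.substr(scheme.size());
    const std::string::size_type slash = rest.find('/');
    if (slash == std::string::npos || slash == 0) {
        return std::nullopt;  // malformed: missing package name or relative path
    }
    const auto it = packageRoots.find(rest.substr(0, slash));
    if (it == packageRoots.end()) {
        return std::nullopt;  // unknown package
    }
    return it->second + rest.substr(slash);  // keeps the leading '/'
}
```

In a real tool the table would be filled from user settings or by scanning for package.xml files; returning `std::nullopt` instead of throwing lets the importer report every unresolved mesh at once.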

LinkForge enables a true “Sim-Ready” workflow: Model in Blender, configure physics/sensors/ros2_control visually, and export valid URDF/XACRO code directly.


Links:

- GitHub/Download: arounamounchili/linkforge

- Documentation: Read the Docs

- Get it on Blender Extensions: linkforge-blender

Happy forging!

8 posts - 4 participants

ROS Discourse General: Proposal: Reproducible actuator-boundary safety (SSC + conformance harness)

Hi all — I’m looking for feedback on a design question around actuator-boundary safety in ROS-based systems.

Once a planner (or LLM-backed stack) can issue actuator commands, failures become motion. Most safety work in ROS focuses on higher layers (planning, perception, behavior trees), but there’s less shared infrastructure around deterministic enforcement at the actuator interface itself.

I’m prototyping a small hardware interposer plus a draft “Safety Contract” spec (SSC v1.1) with three components:

- A machine-readable contract defining caps (velocity / acceleration / effort), modes (development vs. field), and stop semantics

- A conformance harness (including malformed-traffic handling and fuzzing / anti-wedge tests)

- “Evidence packs” (machine-readable logs with wedge counts, latency distributions, and verifier tooling)
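The SSC v1.1 schema itself isn’t shown in the post. As a hedged illustration of what deterministic enforcement of velocity and acceleration caps at the actuator boundary could look like, here is a small sketch; `JointCaps`, `enforceCaps`, and the “non-finite command means stop” rule are our assumptions, not part of the proposal.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical per-joint caps, standing in for one entry of a safety contract.
struct JointCaps {
    double maxVelocity;      // rad/s, symmetric limit
    double maxAcceleration;  // rad/s^2, symmetric limit
};

// Filter one velocity command against the caps, given the previously issued
// command and the control period dt (seconds).
double enforceCaps(double commanded, double previous, double dt, const JointCaps& caps) {
    assert(dt > 0.0 && caps.maxVelocity >= 0.0 && caps.maxAcceleration >= 0.0);
    if (!std::isfinite(commanded)) {
        return 0.0;  // assumed stop semantics for malformed input (NaN/inf)
    }
    // Acceleration cap: limit the change relative to the previous command.
    const double maxStep = caps.maxAcceleration * dt;
    double v = std::clamp(commanded, previous - maxStep, previous + maxStep);
    // Velocity cap applied last, so |v| <= maxVelocity always holds.
    v = std::clamp(v, -caps.maxVelocity, caps.maxVelocity);
    return v;
}
```

Applying the velocity clamp last makes that cap unconditional; the acceleration cap also holds whenever the previous command was itself within the velocity cap. A conformance harness could then check exactly these two invariants on recorded or fuzzed command streams.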

The goal is narrow:

Not “this makes robots safe.”

But: if someone claims actuator-boundary enforcement works, there should be a reproducible way to test and audit that claim.

Some concrete design questions I’m unsure about:

• Does ROS 2 currently have a standard place where actuator-boundary invariants should live?

• Should this layer sit at the driver level, as a node wrapper, or outside ROS entirely?

• What would make a conformance harness credible to you?

• Are there prior art efforts I should be aware of?

I’m happy to share more technical detail if useful. Mostly, I’m interested in whether this layer is actually high-leverage or whether I’m solving the wrong problem.

4 posts - 1 participant

ROS Discourse General: Ament (and cmake) understanding

I regularly add new packages to existing CMakeLists.txt files, but I just follow the existing structure of the code. Adding something to it is not that hard, but it is always trial and error.

Reading the official docs is of course useful, but it doesn’t stick. I forget it immediately.

Is this just a skill issue, and should I invest more time in CMake and “building”? How important is it to know every line of a CMakeLists.txt? Does it make sense to concentrate on it specifically?

Thank you)

5 posts - 3 participants

ROS Discourse General: Piper Arm Kinematics Implementation

Piper Arm Kinematics Implementation

Abstract

This chapter implements forward kinematics (FK) and Jacobian-based inverse kinematics (IK) for the AgileX PIPER robotic arm using the Eigen linear algebra library, and uses custom interactive markers built with interactive_marker_utils to publish target points.

Tags

Forward Kinematics, Jacobian-based Inverse Kinematics, RVIZ Simulation, Robotic Arm DH Parameters, Interactive Markers, AgileX PIPER

Function Demonstration

Code Repository

GitHub Link: https://github.com/agilexrobotics/Agilex-College.git

1. Preparations Before Use

Reference Videos:

1.1 Hardware Preparation

- AgileX Robotics Piper robotic arm

1.2 Software Environment Configuration

- For Piper arm driver deployment, refer to: https://github.com/agilexrobotics/piper_sdk/blob/1_0_0_beta/README(ZH).MD

- For Piper arm ROS control node deployment, refer to: https://github.com/agilexrobotics/piper_ros/blob/noetic/README.MD

- Install the Eigen linear algebra library:

sudo apt install libeigen3-dev

1.3 Prepare DH Parameters and Joint Limits for AgileX PIPER

The modified DH parameter table and joint limits of the PIPER arm can be found in the AgileX PIPER user manual:

2. Forward Kinematics (FK) Calculation

The FK calculation process essentially converts angle values of each joint into the pose of a specific joint of the robotic arm in 3D space. This chapter takes joint6 (the last rotary joint of the arm) as an example.

2.1 Prepare DH Parameters

- Build the FK calculation program based on the PIPER DH parameter table. From the modified DH parameter table of AgileX PIPER in Section 1.3, we obtain:

```cpp
// Modified DH parameters [alpha, a, d, theta_offset]
dh_params_ = {
    {0,        0,         0.123,   0},                 // Joint 1
    {-M_PI/2,  0,         0,       -172.22/180*M_PI},  // Joint 2
    {0,        0.28503,   0,       -102.78/180*M_PI},  // Joint 3
    {M_PI/2,   -0.021984, 0.25075, 0},                 // Joint 4
    {-M_PI/2,  0,         0,       0},                 // Joint 5
    {M_PI/2,   0,         0.091,   0}                  // Joint 6
};
```

For conversion to Standard DH parameters, refer to the following rules:

Standard DH ↔ Modified DH Conversion Rules:

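- Standard DH → Modified DH:
  αᵢ₋₁ (Standard) = αᵢ (Modified)
  aᵢ₋₁ (Standard) = aᵢ (Modified)
  dᵢ (Standard) = dᵢ (Modified)
  θᵢ (Standard) = θᵢ (Modified)

- Modified DH → Standard DH:
  αᵢ (Standard) = αᵢ₊₁ (Modified)
  aᵢ (Standard) = aᵢ₊₁ (Modified)
  dᵢ (Standard) = dᵢ (Modified)
  θᵢ (Standard) = θᵢ (Modified)

Here the subscript i is the row (joint) number in each table: α and a move by one row, while d and θ stay in place. The last standard row has no following modified row, so its α and a are 0.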

The converted Standard DH parameters are:

```cpp
// Standard DH parameters [alpha, a, d, theta_offset]
dh_params_ = {
    {-M_PI/2,  0,         0.123,   0},                 // Joint 1
    {0,        0.28503,   0,       -172.22/180*M_PI},  // Joint 2
    {M_PI/2,   -0.021984, 0,       -102.78/180*M_PI},  // Joint 3
    {-M_PI/2,  0,         0.25075, 0},                 // Joint 4
    {M_PI/2,   0,         0,       0},                 // Joint 5
    {0,        0,         0.091,   0}                  // Joint 6
};
```
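The row-shifting rule above is mechanical enough to automate. A minimal sketch (the function name is ours, not from the Agilex-College repository); it assumes, as in the PIPER tables, that the first modified row has α = a = 0, and it gives the last standard row α = a = 0:

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// One DH row, in the same order as the tables above: {alpha, a, d, theta_offset}.
using DhRow = std::array<double, 4>;

std::vector<DhRow> modifiedToStandard(const std::vector<DhRow>& modified) {
    // Precondition: the first modified row has alpha = a = 0 (frame 0 coincides
    // with the base frame); otherwise that base offset would be silently dropped.
    assert(modified.empty() || (modified[0][0] == 0.0 && modified[0][1] == 0.0));
    std::vector<DhRow> standard(modified.size());
    for (std::size_t i = 0; i < modified.size(); ++i) {
        const bool hasNext = i + 1 < modified.size();
        standard[i][0] = hasNext ? modified[i + 1][0] : 0.0;  // alpha moves up one row
        standard[i][1] = hasNext ? modified[i + 1][1] : 0.0;  // a moves up one row
        standard[i][2] = modified[i][2];                      // d stays in place
        standard[i][3] = modified[i][3];                      // theta offset stays in place
    }
    return standard;
}
```

Feeding it the modified PIPER table reproduces the standard table listed above, row by row.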

- Prepare DH Transformation Matrices

- Modified DH Transformation Matrix:

- Rewrite the modified DH transformation matrix using Eigen:

```cpp
T << cos(theta),            -sin(theta),            0,           a,
     sin(theta)*cos(alpha), cos(theta)*cos(alpha),  -sin(alpha), -sin(alpha)*d,
     sin(theta)*sin(alpha), cos(theta)*sin(alpha),  cos(alpha),  cos(alpha)*d,
     0,                     0,                      0,           1;
```

- Standard DH Transformation Matrix:

- Rewrite the standard DH transformation matrix using Eigen:

```cpp
T << cos(theta), -sin(theta)*cos(alpha), sin(theta)*sin(alpha),  a*cos(theta),
     sin(theta), cos(theta)*cos(alpha),  -cos(theta)*sin(alpha), a*sin(theta),
     0,          sin(alpha),             cos(alpha),             d,
     0,          0,                      0,                      1;
```
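Both Eigen matrices are products of elementary rotations R and translations T about/along the x and z axes. Written out (the standard textbook decomposition; products are taken left to right in increasing i, and α_i, a_i denote the values in row i of the standard table, i.e. row i+1 of the modified table), they also show why the conversion rules in Section 2.1 hold:

```latex
\begin{aligned}
{}^{i-1}T_i^{\mathrm{mod}} &= R_x(\alpha_{i-1})\,T_x(a_{i-1})\,R_z(\theta_i)\,T_z(d_i) \\
{}^{i-1}T_i^{\mathrm{std}} &= R_z(\theta_i)\,T_z(d_i)\,T_x(a_i)\,R_x(\alpha_i) \\
{}^{0}T_6^{\mathrm{mod}} &= R_x(\alpha_0)T_x(a_0)\Big[\prod_{i=1}^{5} R_z(\theta_i)T_z(d_i)\,R_x(\alpha_i)T_x(a_i)\Big]R_z(\theta_6)T_z(d_6) \\
{}^{0}T_6^{\mathrm{std}} &= \Big[\prod_{i=1}^{5} R_z(\theta_i)T_z(d_i)\,T_x(a_i)R_x(\alpha_i)\Big]R_z(\theta_6)T_z(d_6)\,T_x(a_6)R_x(\alpha_6)
\end{aligned}
```

Because R_x(α) and T_x(a) commute, the bracketed products are identical, so the two end poses agree whenever α₀ = a₀ = 0 (first modified row) and α₆ = a₆ = 0 (last standard row), which holds for the PIPER tables.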

- Implement the core function computeFK() for FK calculation. See the complete code in the repository: https://github.com/agilexrobotics/Agilex-College.git

```cpp
Eigen::Matrix4d computeFK(const std::vector<double>& joint_values) {
    // Check if the number of input joint values is sufficient (at least 6)
    if (joint_values.size() < 6) {
        throw std::runtime_error("Piper arm requires at least 6 joint values for FK");
    }

    // Initialize identity matrix as the initial transformation
    Eigen::Matrix4d T = Eigen::Matrix4d::Identity();

    // For each joint:
    //   - calculate actual joint angle = input value + offset
    //   - get fixed parameter d
    //   - calculate the transformation matrix of the current joint and accumulate it
    for (size_t i = 0; i < 6; ++i) {
        double theta = joint_values[i] + dh_params_[i][3];  // θ = joint_value + θ_offset
        double d = dh_params_[i][2];                        // d = fixed d value (for rotary joints)
        T *= computeTransform(
            dh_params_[i][0],  // alpha
            dh_params_[i][1],  // a
            d,                 // d
            theta              // theta
        );
    }

    // Return the final transformation matrix
    return T;
}
```
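For readers who want to check the FK chain without Eigen or the repository, here is a stdlib-only sketch. The helper names are ours, not the repository’s API, and `M_PI` is replaced by a local constant because it is a POSIX extension rather than standard C++. It chains the same per-joint matrices as computeFK() and lets you verify numerically that the modified and standard tables produce the same joint6 pose.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

using Mat4 = std::array<std::array<double, 4>, 4>;
using DhTable = std::array<std::array<double, 4>, 6>;  // rows: {alpha, a, d, theta_offset}

constexpr double kPi = 3.14159265358979323846;  // portable stand-in for M_PI

// PIPER tables from Section 2.1.
const DhTable kPiperModified = {{
    {0.0, 0.0, 0.123, 0.0},
    {-kPi / 2, 0.0, 0.0, -172.22 / 180.0 * kPi},
    {0.0, 0.28503, 0.0, -102.78 / 180.0 * kPi},
    {kPi / 2, -0.021984, 0.25075, 0.0},
    {-kPi / 2, 0.0, 0.0, 0.0},
    {kPi / 2, 0.0, 0.091, 0.0},
}};
const DhTable kPiperStandard = {{
    {-kPi / 2, 0.0, 0.123, 0.0},
    {0.0, 0.28503, 0.0, -172.22 / 180.0 * kPi},
    {kPi / 2, -0.021984, 0.0, -102.78 / 180.0 * kPi},
    {-kPi / 2, 0.0, 0.25075, 0.0},
    {kPi / 2, 0.0, 0.0, 0.0},
    {0.0, 0.0, 0.091, 0.0},
}};

Mat4 matMul(const Mat4& A, const Mat4& B) {
    Mat4 C{};  // zero-initialized
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            for (int k = 0; k < 4; ++k) C[r][c] += A[r][k] * B[k][c];
    return C;
}

// Modified DH: Rx(alpha) Tx(a) Rz(theta) Tz(d), same entries as the Eigen version.
Mat4 modifiedDh(double alpha, double a, double d, double theta) {
    const double ct = std::cos(theta), st = std::sin(theta);
    const double ca = std::cos(alpha), sa = std::sin(alpha);
    return {{{ct, -st, 0.0, a},
             {st * ca, ct * ca, -sa, -sa * d},
             {st * sa, ct * sa, ca, ca * d},
             {0.0, 0.0, 0.0, 1.0}}};
}

// Standard DH: Rz(theta) Tz(d) Tx(a) Rx(alpha), same entries as the Eigen version.
Mat4 standardDh(double alpha, double a, double d, double theta) {
    const double ct = std::cos(theta), st = std::sin(theta);
    const double ca = std::cos(alpha), sa = std::sin(alpha);
    return {{{ct, -st * ca, st * sa, a * ct},
             {st, ct * ca, -ct * sa, a * st},
             {0.0, sa, ca, d},
             {0.0, 0.0, 0.0, 1.0}}};
}

// Chain the six joint transforms (theta = joint value + offset), like computeFK().
Mat4 forwardKinematics(const DhTable& dh, const std::array<double, 6>& q, bool modified) {
    Mat4 T{};
    for (int i = 0; i < 4; ++i) T[i][i] = 1.0;  // identity
    for (std::size_t i = 0; i < 6; ++i) {
        const double theta = q[i] + dh[i][3];
        const Mat4 Ti = modified ? modifiedDh(dh[i][0], dh[i][1], dh[i][2], theta)
                                 : standardDh(dh[i][0], dh[i][1], dh[i][2], theta);
        T = matMul(T, Ti);
    }
    return T;
}
```

For any joint vector, `forwardKinematics(kPiperModified, q, true)` and `forwardKinematics(kPiperStandard, q, false)` should agree to floating-point precision, which is a quick way to catch a transcription error in either table.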

2.2 Verify FK Calculation Accuracy

- Launch the FK verification program:

ros2 launch piper_kinematics test_fk.launch.py

- Launch the RVIZ simulation program, enable the TF tree display, and check whether the pose of link6_from_fk (the arm end-effector calculated by FK) coincides with the original link6 (calculated by joint_state_publisher):

ros2 launch piper_description display_piper_with_joint_state_pub_gui.launch.py

The two frames coincide closely: link6_from_fk and link6 agree to about four decimal places.

3. Inverse Kinematics (IK) Calculation

The IK calculation determines the joint angles required to move the arm’s end-effector to a given target point.

3.1 Confirm Joint Limits

- Joint limits of the PIPER arm must be defined to ensure the IK solution path does not exceed physical constraints (preventing arm damage or safety hazards).

- From Section 1.3, the joint limits of the PIPER arm are:

- The joint limit matrix is defined as:

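```cpp
// Joint limits in radians. The .0 suffixes force floating-point division:
// with integer literals, 154/180 evaluates to 0 and the limit collapses to 0.
std::vector<std::pair<double, double>> limits = {
    {-154.0 / 180.0 * M_PI, 154.0 / 180.0 * M_PI},  // Joint 1
    {0.0,                   195.0 / 180.0 * M_PI},  // Joint 2
    {-175.0 / 180.0 * M_PI, 0.0},                   // Joint 3
    {-102.0 / 180.0 * M_PI, 102.0 / 180.0 * M_PI},  // Joint 4
    {-75.0 / 180.0 * M_PI,  75.0 / 180.0 * M_PI},   // Joint 5
    {-120.0 / 180.0 * M_PI, 120.0 / 180.0 * M_PI}   // Joint 6
};
```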
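A note on units and literals: in C++, an expression such as `154/180*M_PI` divides two integers first, so it evaluates to 0.0, and `195/180*M_PI` evaluates to exactly π. The sketch below (helper names are ours) makes the conversion explicit with a `constexpr` helper and uses a portable π, since `M_PI` is a POSIX extension rather than standard C++:

```cpp
#include <cassert>

// Portable pi: M_PI is a POSIX extension rather than standard C++.
constexpr double kPi = 3.14159265358979323846;

constexpr double deg2rad(double degrees) { return degrees / 180.0 * kPi; }

// With two integer literals, '/' is integer division and truncates toward zero.
static_assert(154 / 180 == 0, "154/180*M_PI would evaluate to 0.0");
static_assert(195 / 180 == 1, "195/180*M_PI would evaluate to exactly pi");

struct JointLimit {
    double lower;  // rad
    double upper;  // rad
};

// PIPER limits from Section 3.1, converted with floating-point arithmetic.
constexpr JointLimit kPiperLimits[6] = {
    {deg2rad(-154), deg2rad(154)}, {deg2rad(0), deg2rad(195)},
    {deg2rad(-175), deg2rad(0)},   {deg2rad(-102), deg2rad(102)},
    {deg2rad(-75), deg2rad(75)},   {deg2rad(-120), deg2rad(120)},
};

// True if joint angle q (rad) lies within the limit.
bool withinLimit(double q, const JointLimit& limit) {
    assert(limit.lower <= limit.upper);
    return q >= limit.lower && q <= limit.upper;
}
```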

3.2 Step-by-Step Implementation of Jacobian Matrix Method for IK

Solution Process:

1. Calculate error e: the difference between the current pose and the target pose (a 6-dimensional vector: 3 for position + 3 for orientation).

2. Is error e below tolerance?
   - Yes: return the current θ as the solution.
   - No: proceed to iterative optimization.

3. Calculate the Jacobian matrix J: a 6×6 matrix.

4. Calculate the damped pseudoinverse:
   J⁺ = Jᵀ(JJᵀ + λ²I)⁻¹
   where λ is the damping coefficient (it avoids numerical instability in singular configurations).

5. Calculate the joint angle increment:
   Δθ = J⁺e
   (adjust the joint angles using error e and the pseudoinverse).

6. Update the joint angles:
   θ = θ + Δθ
   (apply the adjustment to the current joint angles).

7. Apply joint limits.

8. Normalize joint angles.

9. Maximum iterations reached?
   - No: return to Step 1 (recompute FK and error e with the updated θ).
   - Yes: throw a non-convergence error.
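To see the loop in isolation, here is a dependency-free sketch of the same damped least-squares iteration on a hypothetical planar 2-link arm (not the PIPER arm; link lengths are assumed). With a 2×2 Jacobian and position-only error, (JJᵀ + λ²I)⁻¹ can be written in closed form. Joint limits (step 7) are omitted, and the sketch returns false instead of throwing on non-convergence.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical planar 2-link arm (NOT the PIPER arm); link lengths are assumed.
struct Planar2Link {
    double l1 = 1.0;  // first link length (m)
    double l2 = 1.0;  // second link length (m)

    // Forward kinematics: end-effector (x, y) for joint angles q = (q1, q2).
    std::array<double, 2> fk(const std::array<double, 2>& q) const {
        return {l1 * std::cos(q[0]) + l2 * std::cos(q[0] + q[1]),
                l1 * std::sin(q[0]) + l2 * std::sin(q[0] + q[1])};
    }
};

// Damped least-squares IK. Returns true once |e| < tol; q holds the final angles.
bool solveIkDls(const Planar2Link& arm, const std::array<double, 2>& target,
                std::array<double, 2>& q, double lambda = 0.1,
                double tol = 1e-6, int maxIter = 200) {
    assert(lambda > 0.0 && "damping keeps J*J^T + lambda^2*I invertible");
    constexpr double kTwoPi = 2.0 * 3.14159265358979323846;
    for (int iter = 0; iter < maxIter; ++iter) {
        // Step 1: error e = target - current position
        const std::array<double, 2> p = arm.fk(q);
        const double ex = target[0] - p[0];
        const double ey = target[1] - p[1];
        // Step 2: below tolerance?
        if (std::hypot(ex, ey) < tol) return true;
        // Step 3: analytic 2x2 Jacobian of the end-effector position
        const double s1 = std::sin(q[0]), c1 = std::cos(q[0]);
        const double s12 = std::sin(q[0] + q[1]), c12 = std::cos(q[0] + q[1]);
        const double j11 = -arm.l1 * s1 - arm.l2 * s12, j12 = -arm.l2 * s12;
        const double j21 = arm.l1 * c1 + arm.l2 * c12, j22 = arm.l2 * c12;
        // Step 4: M = J*J^T + lambda^2*I (symmetric 2x2), then y = M^-1 * e
        const double m11 = j11 * j11 + j12 * j12 + lambda * lambda;
        const double m12 = j11 * j21 + j12 * j22;
        const double m22 = j21 * j21 + j22 * j22 + lambda * lambda;
        const double det = m11 * m22 - m12 * m12;  // > 0 since M is positive definite
        const double y1 = (m22 * ex - m12 * ey) / det;
        const double y2 = (m11 * ey - m12 * ex) / det;
        // Steps 5-6: delta_theta = J^T * y, theta += delta_theta
        q[0] += j11 * y1 + j21 * y2;
        q[1] += j12 * y1 + j22 * y2;
        // Step 8: wrap to [-pi, pi] (step 7, joint limits, omitted here)
        q[0] = std::remainder(q[0], kTwoPi);
        q[1] = std::remainder(q[1], kTwoPi);
    }
    return false;  // step 9: iteration budget exhausted
}
```

Starting away from the straight-arm singularity (for example q = (0.3, 0.8)), a reachable target such as (1, 1) converges in a handful of iterations, while a target beyond l1 + l2 never meets the tolerance and the function returns false, mirroring the exception path in computeIK().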

Core Function computeIK():

```cpp
// Member function of the IK solver class.
// Requires <algorithm> (std::clamp), <iostream>, <stdexcept>, <vector> and <Eigen/Dense>.
std::vector<double> computeIK(const std::vector<double>& initial_guess,
                              const Eigen::Matrix4d& target_pose,
                              bool verbose = false,
                              Eigen::VectorXd* final_error = nullptr) {
    // Validate the initial guess (the arm has 6 joints)
    if (initial_guess.size() < 6) {
        throw std::runtime_error("Initial guess must have at least 6 joint values");
    }

    // Start iterating from the initial guess
    std::vector<double> joint_values = initial_guess;
    Eigen::Matrix4d current_pose;
    Eigen::VectorXd error(6);
    bool success = false;

    for (int iter = 0; iter < max_iterations_; ++iter) {
        // FK for the current joint values gives the current position and orientation
        current_pose = fk_.computeFK(joint_values);

        // 6D error (position, orientation) between the current and target pose
        error = computePoseError(current_pose, target_pose);

        if (verbose) {
            std::cout << "Iteration " << iter << ": error norm = " << error.norm()
                      << " (pos: " << error.head<3>().norm()
                      << ", orient: " << error.tail<3>().norm() << ")\n";
        }

        // Converged when position and orientation errors are each below their tolerance
        if (error.head<3>().norm() < position_tolerance_ &&
            error.tail<3>().norm() < orientation_tolerance_) {
            success = true;
            break;
        }

        // Jacobian matrix (analytical by default, numerical otherwise)
        Eigen::MatrixXd J = use_analytical_jacobian_ ?
            computeAnalyticalJacobian(joint_values, current_pose) :
            computeNumericalJacobian(joint_values);

        // Levenberg-Marquardt (damped least squares):
        // Δθ = Jᵀ(JJᵀ + λ²I)⁻¹e,  θ_new = θ + Δθ
        Eigen::MatrixXd Jt = J.transpose();
        Eigen::MatrixXd JJt = J * Jt;
        // lambda_: damping coefficient (default 0.1) that avoids numerical
        // instability in singular configurations
        JJt.diagonal().array() += lambda_ * lambda_;
        Eigen::VectorXd delta_theta = Jt * JJt.ldlt().solve(error);

        // Update the 6 joint angles
        for (int i = 0; i < 6; ++i) {
            double new_value = joint_values[i] + delta_theta(i);
            // Keep the updated θ within the physical joint limits
            joint_values[i] = std::clamp(new_value, joint_limits_[i].first,
                                         joint_limits_[i].second);
        }

        // Wrap joint angles to [-π, π] to avoid unnecessary multi-turn rotation
        normalizeJointAngles(joint_values);
    }

    // No solution found within max_iterations_ (100)
    if (!success) {
        throw std::runtime_error("IK did not converge within maximum iterations");
    }

    // Report the final pose error if requested
    if (final_error != nullptr) {
        current_pose = fk_.computeFK(joint_values);
        *final_error = computePoseError(current_pose, target_pose);
    }

    return joint_values;
}
```
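To see the same iteration run end to end outside the PIPER code base, the following is a minimal self-contained sketch on a hypothetical 2-link planar arm. It solves for position only and assumes link lengths of 1.0. Hand-written 2×2 algebra replaces Eigen, and a central-difference Jacobian stands in for `computeNumericalJacobian()`. Joints are clamped and then wrapped, as in the loop above. `planarFK`, `numericalJacobian`, `wrapToPi` and `planarIK` are illustrative names and are not part of `piper_kinematics`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <stdexcept>

// Hypothetical 2-link planar arm; link lengths are assumptions, not PIPER values.
constexpr double kL1 = 1.0;
constexpr double kL2 = 1.0;
constexpr double kPi = 3.14159265358979323846;

using Vec2 = std::array<double, 2>;

// Forward kinematics: end-effector (x, y) for joint angles q.
Vec2 planarFK(const Vec2& q) {
    return {kL1 * std::cos(q[0]) + kL2 * std::cos(q[0] + q[1]),
            kL1 * std::sin(q[0]) + kL2 * std::sin(q[0] + q[1])};
}

// Wrap an angle into [-pi, pi], the same idea as normalizeJointAngles().
double wrapToPi(double a) { return std::remainder(a, 2.0 * kPi); }

// Central-difference Jacobian: J[r][c] = d fk_r / d q_c.
std::array<Vec2, 2> numericalJacobian(const Vec2& q, double h = 1e-6) {
    assert(h > 0.0);
    std::array<Vec2, 2> J{};
    for (int c = 0; c < 2; ++c) {
        Vec2 qp = q, qm = q;
        qp[c] += h;
        qm[c] -= h;
        const Vec2 fp = planarFK(qp), fm = planarFK(qm);
        for (int r = 0; r < 2; ++r) J[r][c] = (fp[r] - fm[r]) / (2.0 * h);
    }
    return J;
}

// Damped least squares: dq = J^T (J J^T + lambda^2 I)^{-1} e.
Vec2 planarIK(Vec2 q, const Vec2& target, double lambda = 0.1,
              int max_iter = 100, double tol = 1e-6) {
    const double lo = -kPi, hi = kPi;  // assumed joint limits
    for (int iter = 0; iter < max_iter; ++iter) {
        const Vec2 p = planarFK(q);
        const Vec2 e = {target[0] - p[0], target[1] - p[1]};
        if (std::hypot(e[0], e[1]) < tol) return q;
        const auto J = numericalJacobian(q);
        // A = J J^T + lambda^2 I is symmetric positive definite, so det > 0.
        const double a = J[0][0] * J[0][0] + J[0][1] * J[0][1] + lambda * lambda;
        const double b = J[0][0] * J[1][0] + J[0][1] * J[1][1];
        const double d = J[1][0] * J[1][0] + J[1][1] * J[1][1] + lambda * lambda;
        const double det = a * d - b * b;
        // y = A^{-1} e via the closed-form 2x2 inverse
        const double y0 = (d * e[0] - b * e[1]) / det;
        const double y1 = (-b * e[0] + a * e[1]) / det;
        for (int i = 0; i < 2; ++i) {
            const double dq = J[0][i] * y0 + J[1][i] * y1;  // (J^T y)_i
            q[i] = wrapToPi(std::clamp(q[i] + dq, lo, hi));
        }
    }
    throw std::runtime_error("IK did not converge within maximum iterations");
}
```

For example, `planarIK({0.0, 0.5}, planarFK({0.3, 0.8}))` returns joint angles whose FK matches the target within `tol`. A target farther away than `kL1 + kL2` never meets the tolerance, so the call throws, which mirrors the non-convergence branch of `computeIK()`.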

3.3 Publish 3D Target Points for the Arm Using Interactive Markers

- Install the ROS 2 dependency packages:

```bash
sudo apt install ros-${ROS_DISTRO}-interactive-markers ros-${ROS_DISTRO}-tf2-ros
```

- Launch `interactive_marker_utils` to publish 3D target points:

```bash
ros2 launch interactive_marker_utils marker.launch.py
```

- Launch RViz2 to observe the marker:

- Drag the marker and use `ros2 topic echo` to verify that the published target point updates:

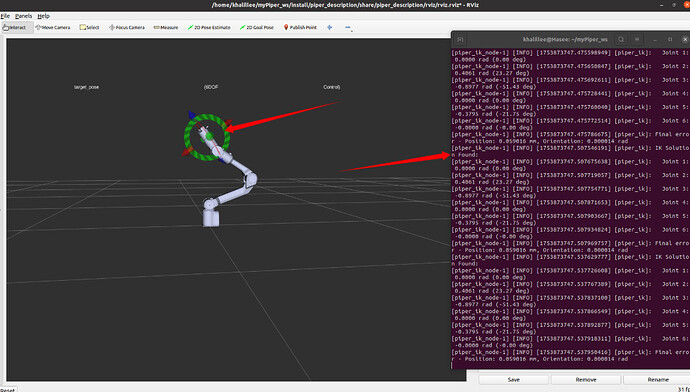
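`computeIK()` takes its target as an `Eigen::Matrix4d`, so the marker's target has to be turned into a homogeneous transform before the solver can use it. The following is a minimal sketch of that conversion. It assumes the target arrives as a position plus a quaternion in `geometry_msgs/Pose` order (x, y, z, w). `poseToMatrix` and `Mat4` are hypothetical names, and `std::array` stands in for Eigen so that the snippet has no dependencies.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Hypothetical helper: 4x4 homogeneous transform from position (px, py, pz)
// and quaternion (qx, qy, qz, qw). The quaternion is normalized first.
Mat4 poseToMatrix(double px, double py, double pz,
                  double qx, double qy, double qz, double qw) {
    const double n = std::sqrt(qx * qx + qy * qy + qz * qz + qw * qw);
    assert(n > 0.0);
    qx /= n; qy /= n; qz /= n; qw /= n;
    Mat4 T{};
    // Rotation block from the unit quaternion, translation in the last column
    T[0] = {1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw), px};
    T[1] = {2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw), py};
    T[2] = {2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy), pz};
    T[3] = {0.0, 0.0, 0.0, 1.0};
    return T;
}
```

With Eigen available, `Eigen::Quaterniond(w, x, y, z).toRotationMatrix()` produces the same rotation block. Note that Eigen's constructor takes w first.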

3.4 Verify IK Correctness in RViz via Interactive Markers

- Launch the AgileX PIPER RViz simulation demo (the model will not display correctly without `joint_state_publisher`):

```bash
ros2 launch piper_description display_piper.launch.py
```

- Launch the IK node and the `interactive_marker` node (both are started by the same launch file). Once they are running, the arm displays correctly:

```bash
ros2 launch piper_kinematics piper_ik.launch.py
```

- Use the `interactive_marker` to drive the IK calculation:

  - Drag the `interactive_marker` to watch the IK solver compute joint angles in real time:

  - If the `interactive_marker` is dragged to an unsolvable position, the solver throws an exception:
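Because `computeIK()` throws `std::runtime_error` when it cannot converge, the node that calls it has to decide how to handle a failed request. The following is a minimal sketch of one possible policy: catch the exception and keep the last valid solution, so the arm is never commanded toward an unsolvable pose. Holding the last solution is an assumption, not necessarily what `piper_ik` does. `solveOrHold` and `Joints` are hypothetical names. The solver is passed in as a callable so that the sketch does not depend on the PIPER classes.

```cpp
#include <cassert>
#include <functional>
#include <iostream>
#include <stdexcept>
#include <vector>

using Joints = std::vector<double>;

// Hypothetical wrapper: run the solver warm-started from the last valid
// solution. On failure, leave last_valid unchanged and report false.
bool solveOrHold(const std::function<Joints(const Joints&)>& solver,
                 Joints& last_valid) {
    assert(solver);  // a callable must be provided
    try {
        // Assignment only happens if the solver returns normally
        last_valid = solver(last_valid);
        return true;
    } catch (const std::runtime_error& e) {
        std::cerr << "IK failed, holding last solution: " << e.what() << '\n';
        return false;
    }
}
```

In an IK node, `solver` could be a lambda such as `[&](const Joints& guess) { return ik.computeIK(guess, target_pose); }`. Here `ik` and `target_pose` are placeholders for the node's own solver instance and the marker target.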

4. Verify IK on the Physical PIPER Arm

- First, run the scripts that find and activate the CAN port used to communicate with the PIPER arm:

```bash
cd piper_ros
./find_all_can_port.sh
./can_activate.sh
```

- Launch the physical PIPER control node:

```bash
ros2 launch piper my_start_single_piper_rviz.launch.py
```

- Launch the IK node and the `interactive_marker` node (both are started by the same launch file). The arm will move to the HOME position:

```bash
ros2 launch piper_kinematics piper_ik.launch.py
```

- Drag the `interactive_marker` and observe the movement of the physical PIPER arm.

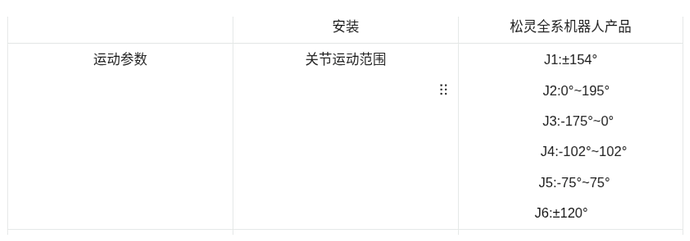
