Read and visualize your robot sensors

Description: Read and visualize your robot sensors

Tutorial Level: BEGINNER

Next Tutorial: Command your robot with simple motion commands

This tutorial will cover the steps needed in order to read and visualize your robot's sensors.

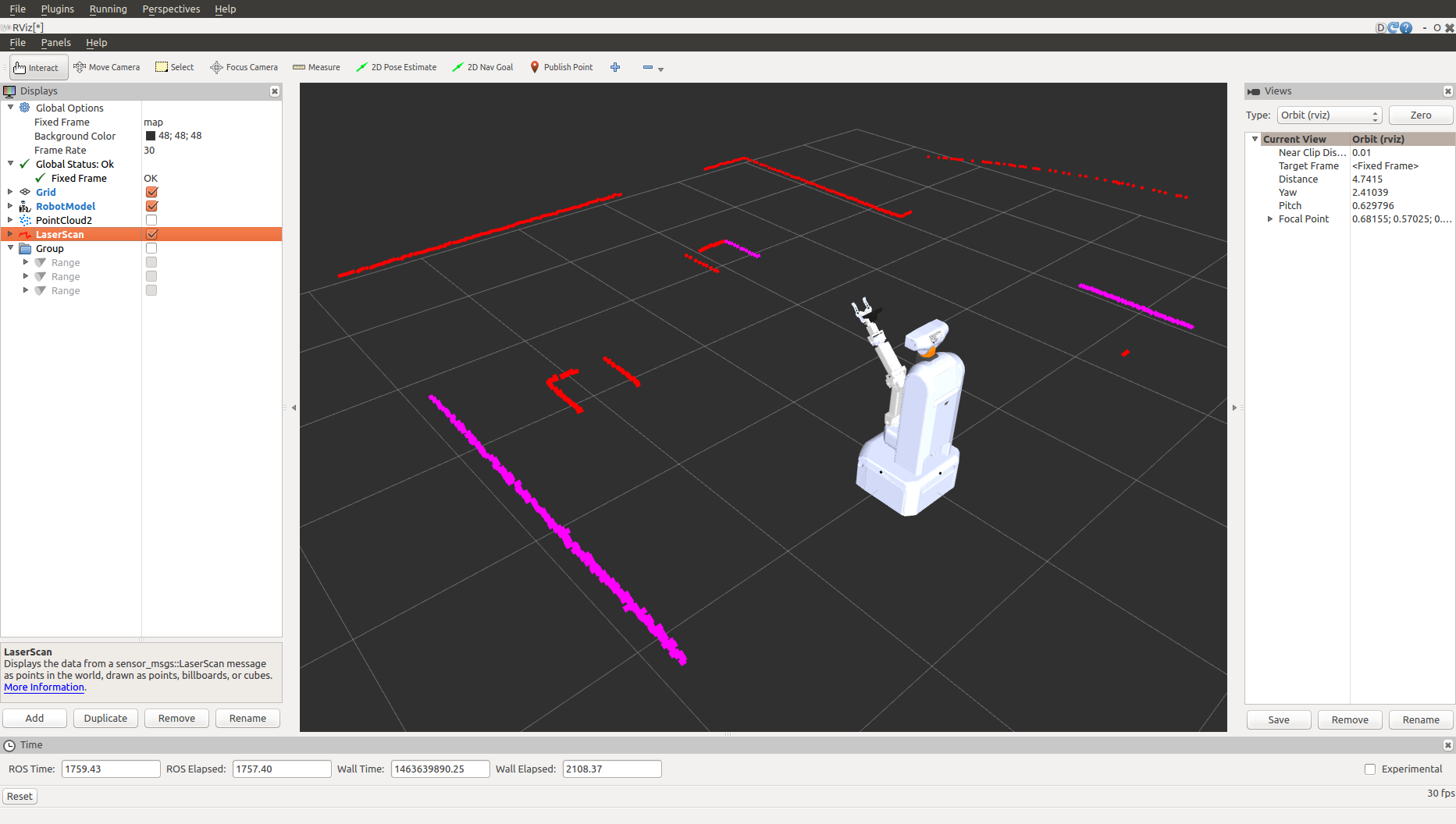

Laser scanner

The laser scanner driver node is hokuyo_node. The data is published through a sensor_msgs/LaserScan message under the topic /scan.

To view the laser scan in the rqt rqt_rviz plugin, select from the rqt upper menu:

Plugins -> Visualization -> Rviz

To add the LaserScan visualization to Rviz, press the Add button at the bottom of the Rviz displays list window on the left, select LaserScan under the rviz folder, and press OK at the bottom of the window (Add -> LaserScan -> OK).

A LaserScan entry now appears in the Rviz displays list window on the left. Press the small arrow to the left of the LaserScan entry to expand its properties. For the Topic property, select /scan. Note: the LaserScan visualization will not show anything in Rviz unless the robot is in front of an object.

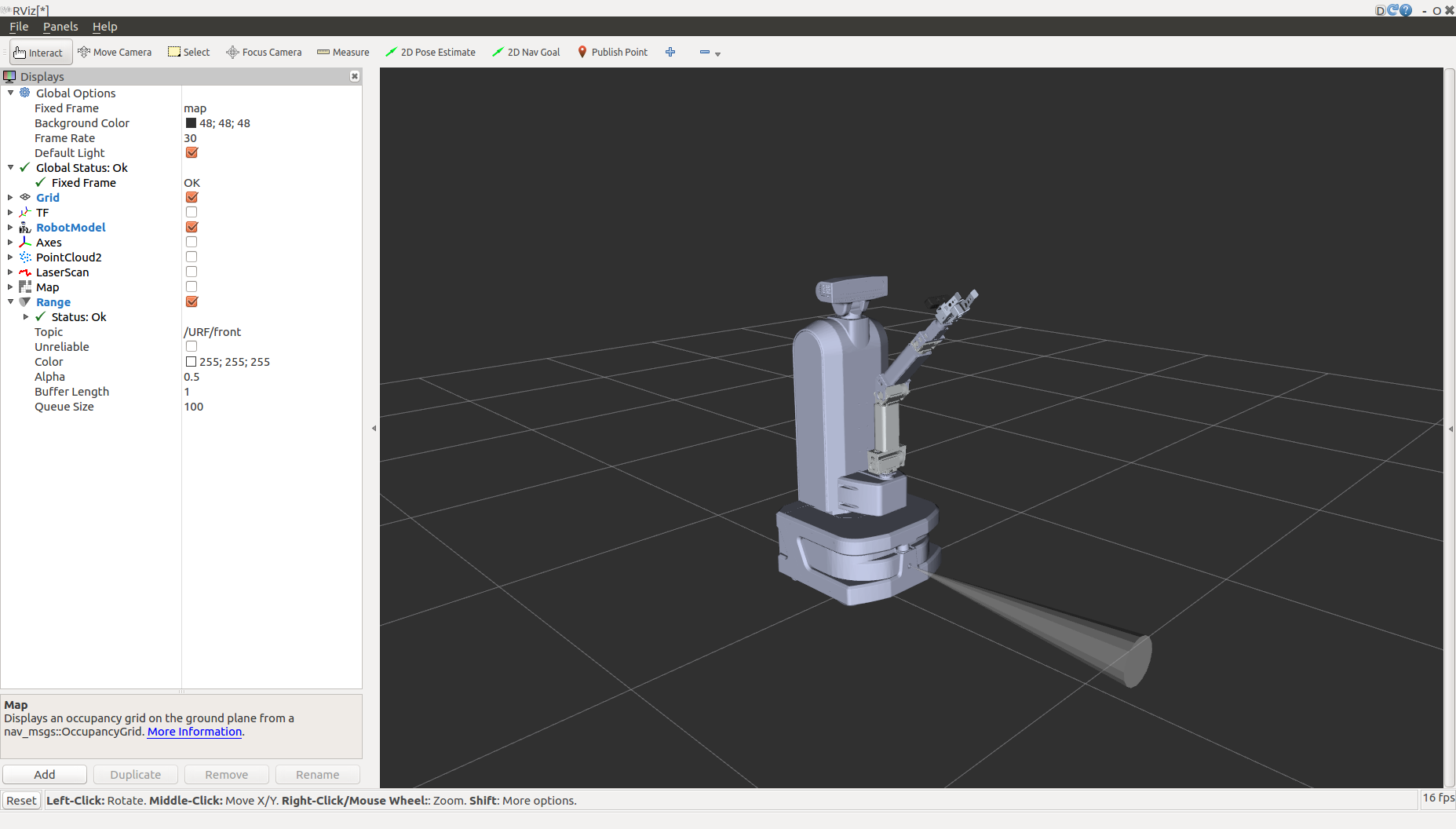
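The /scan topic can also be read from code. The following is a minimal rospy sketch, assuming a ROS 1 environment; the node name `scan_listener` and the helper names are hypothetical and not part of the robot's software. It reports the closest valid return, which is one way to confirm that an object is actually in front of the scanner.

```python
import math


def closest_return(ranges, angle_min, angle_increment, range_min, range_max):
    """Return (distance_m, angle_rad) of the nearest valid return, or None.

    Readings that are NaN, infinite, or outside [range_min, range_max]
    are treated as "no object seen" and skipped.
    """
    best = None
    for i, r in enumerate(ranges):
        if math.isnan(r) or math.isinf(r):
            continue
        if r < range_min or r > range_max:
            continue
        if best is None or r < best[0]:
            best = (r, angle_min + i * angle_increment)
    return best


def on_scan(scan):
    """Callback for sensor_msgs/LaserScan messages."""
    import rospy
    hit = closest_return(scan.ranges, scan.angle_min, scan.angle_increment,
                         scan.range_min, scan.range_max)
    if hit is None:
        rospy.loginfo("No object within scanner range")
    else:
        rospy.loginfo("Closest object: %.2f m at %.1f deg",
                      hit[0], math.degrees(hit[1]))


def listen():
    """Subscribe to /scan; needs a sourced ROS 1 workspace and a running master."""
    import rospy
    from sensor_msgs.msg import LaserScan
    rospy.init_node("scan_listener")  # hypothetical node name
    rospy.Subscriber("/scan", LaserScan, on_scan)
    rospy.spin()
```

Calling `listen()` from a terminal where the ROS environment is sourced starts the node. The filtering lives in a plain function so that it can be tried without a robot attached.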

Ultrasonic Range Finders (URF)

The range finder data is published through a sensor_msgs/Range message under the topic /URF/<sensor_name>.

ARMadillo2 has a range finder mounted on the front of the mobile base, and its topic is /URF/front.

To view the range finder data in the rqt rqt_rviz plugin, select from the rqt upper menu:

Plugins -> Visualization -> Rviz

To add the URF visualization to Rviz, press the Add button at the bottom of the Rviz displays list window on the left, select Range under the rviz folder, and press OK at the bottom of the window (Add -> Range -> OK).

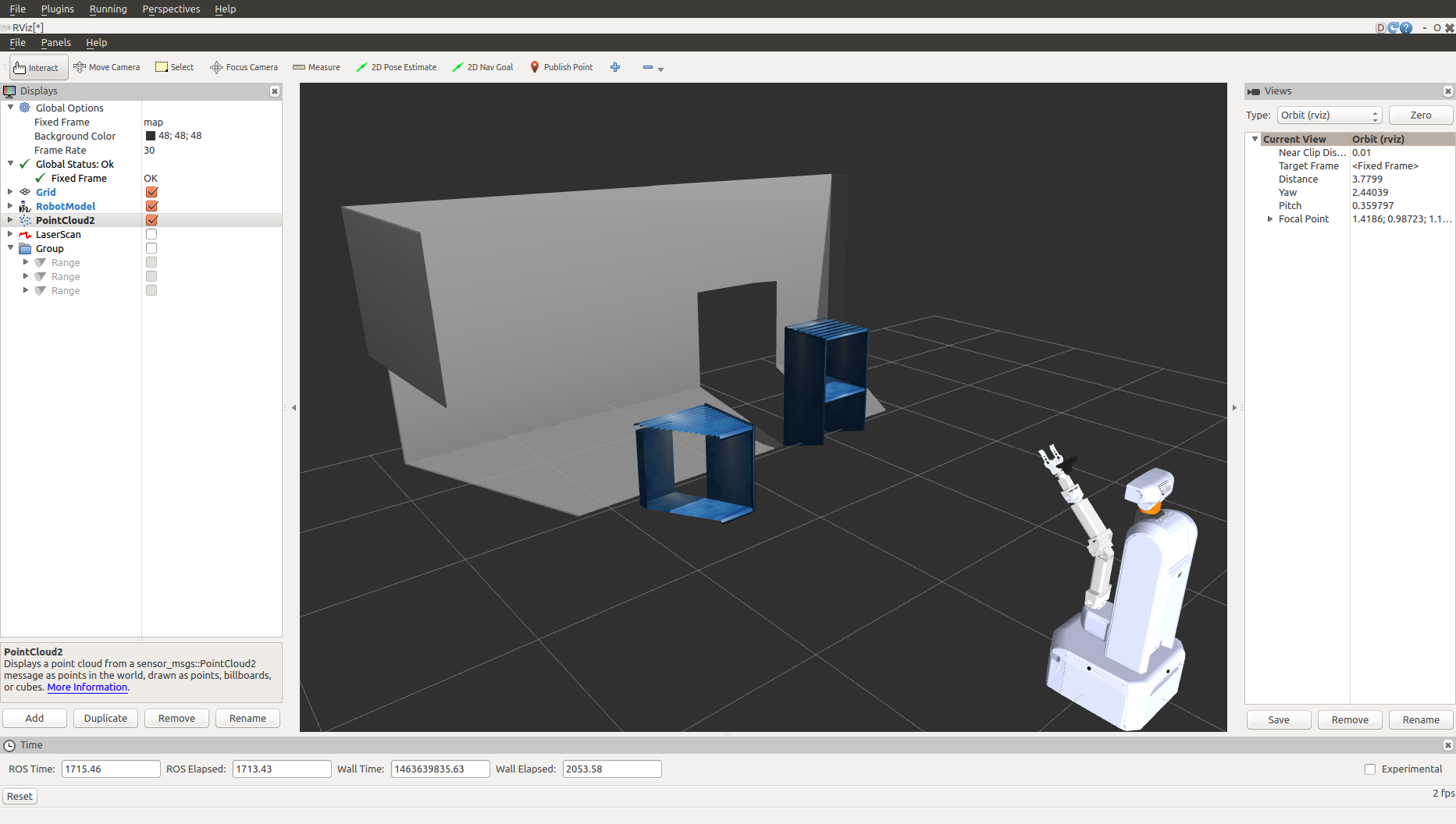
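As a rough illustration of reading the front range finder from code, the sketch below subscribes to /URF/front and discards readings outside the limits reported in the sensor_msgs/Range message itself. It assumes a ROS 1 environment; the node name `urf_listener` and the helper names are hypothetical.

```python
import math


def valid_range(distance, min_range, max_range):
    """Return the distance in metres if it lies within the sensor limits, else None."""
    if math.isnan(distance) or math.isinf(distance):
        return None
    if distance < min_range or distance > max_range:
        return None
    return distance


def on_range(msg):
    """Callback for sensor_msgs/Range messages."""
    import rospy
    distance = valid_range(msg.range, msg.min_range, msg.max_range)
    if distance is None:
        rospy.loginfo("Front URF: no valid reading")
    else:
        rospy.loginfo("Front URF: %.2f m", distance)


def listen():
    """Subscribe to /URF/front; needs a sourced ROS 1 workspace and a running master."""
    import rospy
    from sensor_msgs.msg import Range
    rospy.init_node("urf_listener")  # hypothetical node name
    rospy.Subscriber("/URF/front", Range, on_range)
    rospy.spin()
```

Calling `listen()` from a sourced ROS terminal starts the node.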

RGB-D depth cameras

Kinect 2

We use the following collection of tools and libraries for a ROS Interface to the Kinect One (Kinect v2):

https://github.com/code-iai/iai_kinect2

See https://github.com/code-iai/iai_kinect2/tree/master/kinect2_bridge for details on topics names and types.

SoftKinetic

We use the DepthSenseSDK for a ROS interface to the SoftKinetic camera.

To add the depth camera visualization to Rviz, press the Add button at the bottom of the Rviz displays list window on the left, select PointCloud2 under the rviz folder, and press OK at the bottom of the window (Add -> PointCloud2 -> OK). Then, in the Displays menu, press DepthCloud -> Depth Map Topic and choose the camera you want to use.
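A point cloud can also be inspected from code. The sketch below is a minimal example under several assumptions: a ROS 1 environment, a camera that publishes sensor_msgs/PointCloud2, and a default topic of `/kinect2/qhd/points` (one of the topics listed in the kinect2_bridge documentation; the topic on your robot may differ). The node name `depth_listener` and the helper names are hypothetical. It treats the z coordinate as depth, which holds when the cloud is expressed in the camera's optical frame.

```python
def depth_stats(points):
    """Return (count, nearest_z, mean_z) for an iterable of (x, y, z) points.

    Returns None when the iterable is empty.
    """
    count = 0
    total = 0.0
    nearest = None
    for _x, _y, z in points:
        count += 1
        total += z
        if nearest is None or z < nearest:
            nearest = z
    if count == 0:
        return None
    return count, nearest, total / count


def on_cloud(cloud):
    """Callback for sensor_msgs/PointCloud2 messages."""
    import rospy
    from sensor_msgs import point_cloud2
    # skip_nans drops pixels for which the camera has no depth measurement
    points = point_cloud2.read_points(cloud, field_names=("x", "y", "z"),
                                      skip_nans=True)
    stats = depth_stats(points)
    if stats is None:
        rospy.loginfo("Cloud contains no valid points")
    else:
        rospy.loginfo("%d points, nearest %.2f m, mean depth %.2f m", *stats)


def listen(topic="/kinect2/qhd/points"):
    """Subscribe to a point cloud topic (the default topic name is an assumption)."""
    import rospy
    from sensor_msgs.msg import PointCloud2
    rospy.init_node("depth_listener")  # hypothetical node name
    rospy.Subscriber(topic, PointCloud2, on_cloud)
    rospy.spin()
```

Pass the topic that your camera driver actually publishes, for example `listen("/your/points/topic")`.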

To use both the Kinect 2 camera and the SoftKinetic camera at the same time on the real robot, run the armadillo2 launch file with kinect:=true. After a few seconds, run the following launch file in another terminal to start the SoftKinetic camera as well:

$ roslaunch softkinetic_camera softkinetic_camera_ds325.launch

GPS

The GPS data is published through a sensor_msgs/NavSatFix message under the topic /GPS/fix.
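As an illustration of consuming /GPS/fix, the sketch below logs each valid fix and the great-circle distance travelled since the previous one. It assumes a ROS 1 environment; the node name `gps_listener`, the helper names, and the spherical Earth radius used by the haversine formula are choices made for this example only.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, spherical approximation
STATUS_NO_FIX = -1          # value of sensor_msgs/NavSatStatus.STATUS_NO_FIX

_last_fix = None  # (latitude, longitude) of the previous valid fix


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def on_fix(fix):
    """Callback for sensor_msgs/NavSatFix messages."""
    import rospy
    global _last_fix
    if fix.status.status == STATUS_NO_FIX:
        rospy.loginfo("GPS: no fix yet")
        return
    moved = 0.0
    if _last_fix is not None:
        moved = haversine_m(_last_fix[0], _last_fix[1],
                            fix.latitude, fix.longitude)
    _last_fix = (fix.latitude, fix.longitude)
    rospy.loginfo("GPS: lat %.6f, lon %.6f, alt %.1f m, moved %.2f m",
                  fix.latitude, fix.longitude, fix.altitude, moved)


def listen():
    """Subscribe to /GPS/fix; needs a sourced ROS 1 workspace and a running master."""
    import rospy
    from sensor_msgs.msg import NavSatFix
    rospy.init_node("gps_listener")  # hypothetical node name
    rospy.Subscriber("/GPS/fix", NavSatFix, on_fix)
    rospy.spin()
```

Calling `listen()` from a sourced ROS terminal starts the node.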

Inertial Measurement Unit (IMU)

The IMU data is published through a sensor_msgs/Imu message under the topic /IMU/data.

The magnetometer data is published through a geometry_msgs/Vector3Stamped message under the topic /IMU/magnetic.
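Both IMU topics can be read from code as well. The sketch below is a minimal example, assuming a ROS 1 environment; the node name `imu_listener` and the helper names are hypothetical. It converts the orientation quaternion from /IMU/data into a yaw angle and logs the magnitude of the vector from /IMU/magnetic. The units of the magnetometer vector are not stated here, so the magnitude is logged without a unit.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Return the yaw (rotation about the z axis) in radians of a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def field_magnitude(x, y, z):
    """Return the Euclidean norm of a 3D vector."""
    return math.sqrt(x * x + y * y + z * z)


def on_imu(msg):
    """Callback for sensor_msgs/Imu messages."""
    import rospy
    # Per the sensor_msgs/Imu definition, a first covariance element of -1
    # means the driver does not provide an orientation estimate.
    if msg.orientation_covariance[0] == -1:
        rospy.loginfo("IMU: no orientation estimate")
        return
    q = msg.orientation
    yaw = yaw_from_quaternion(q.x, q.y, q.z, q.w)
    rospy.loginfo("IMU yaw: %.1f deg", math.degrees(yaw))


def on_magnetic(msg):
    """Callback for geometry_msgs/Vector3Stamped messages."""
    import rospy
    v = msg.vector
    rospy.loginfo("Magnetic field magnitude: %.6f", field_magnitude(v.x, v.y, v.z))


def listen():
    """Subscribe to both IMU topics; needs a sourced ROS 1 workspace and a running master."""
    import rospy
    from geometry_msgs.msg import Vector3Stamped
    from sensor_msgs.msg import Imu
    rospy.init_node("imu_listener")  # hypothetical node name
    rospy.Subscriber("/IMU/data", Imu, on_imu)
    rospy.Subscriber("/IMU/magnetic", Vector3Stamped, on_magnetic)
    rospy.spin()
```

Calling `listen()` from a sourced ROS terminal starts the node.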