| Note: This tutorial assumes that you have completed the previous tutorials: Training. |

| |

How to run the tracker/detector of BLORT

Description: This tutorial will describe how to launch BLORT to detect and track.Tutorial Level: INTERMEDIATE

Next Tutorial: Tune BLORT

Introduction

In this step we are going to use two nodes of BLORT, namely

- tracker_node

- detector_node

They together are forming the actual tracker/detector system.

tracker_node

Does edge-based tracking after a close-enough pose estimate was given. It's purpose is twofold, provides faster speed than the detector_node and refines the rough pose estimate of it.

detector_node

The big brother: slow and big but the little brother would never get to anywhere without it. The role of the detector_node is to provide an approximate pose estimation of the learned object's pose, if it's in the field of view. It's using feature based methods to be robust.

Configuration

First check that you have the model of the object you’d like to detect and track. Open $(find blort_ros)/bin/tracking.ini and look for these lines:

[Files] Model = Pringles.ply SiftModel = Pringles.sift

Replace Pringles with however your model is called. Make sure that you have both files at the right place. (If you didn’t do anything with them after the training, they should be ok.) Pringles.sift should be located at $(find blort_ros)/Resources/sift/ and Pringles.ply along with texture files (.jpg) at $(find blort_ros)/Resources/ply/.

Usage

In this tutorial a bag file included in $(find blort_ros)/Resources/bags will be used. First of all, play the bag file as follows:

roscd blort_ros cd Resources/bags rosbag play pringles_single_stereo_image.bag --loop

The bag file contains the topics /stereo/left/camera_info, /stereo/left/image, /stereo/right/camera_info and /stereo/right/image.

To start the detector and tracker nodes execute: $ roslaunch blort_ros tracking.launch

<launch>

<!-- Tracker node -->

<node pkg="blort_ros" type="gltracker_node" name="blort_tracker" args="$(find blort_ros)">

<param name="launch_mode" value="tracking" />

<remap from="/blort_camera_info" to="/stereo/left/camera_info" />

<remap from="/blort_image" to="/stereo/left/image" />

</node>

<!-- Detector node -->

<node pkg="blort_ros" type="gldetector_node" name="blort_detector" args="$(find blort_ros)">

<remap from="/blort_camera_info" to="/stereo/left/camera_info" />

</node>

</launch>To have some control and feedback execute:

$ roslaunch blort_ros view_control.launch

<launch>

<node pkg="dynamic_reconfigure" type="reconfigure_gui" name="reconfigure_gui" />

<!-- Visual feedback -->

<node pkg="image_view" type="image_view" name="image_view_tracker">

<remap from="/image" to="/blort_tracker/image_result" />

</node>

<!-- Visual feedback -->

<node pkg="image_view" type="image_view" name="image_view_detector">

<remap from="/image" to="/blort_detector/image_result" />

</node>

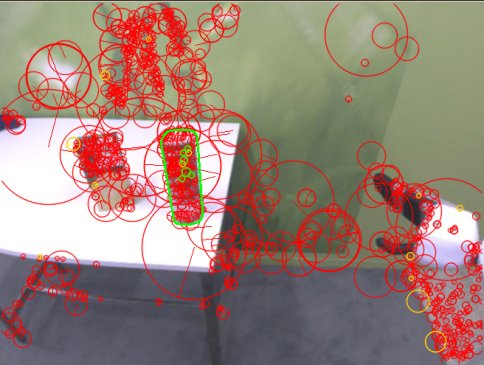

</launch>You should see something like these:

Result of the detector_node: (reds are detected sifts, yellows are matched sifts, greens are matched and inlier sifts)

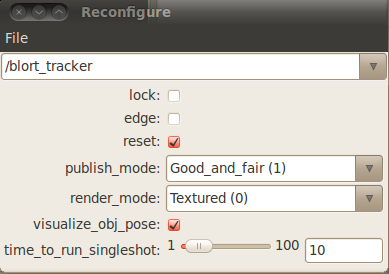

Result of the tracker_node: The tracker will publish the pose if it’s confidence reaches a certain threshold. This publishing threshold can be modified using the reconfigure gui’s publish_mode field. Hitting “reset” on the gui will push the tracker back to recovery state (it will call the detector for a new pose to initialize the tracking).

![]()

reconfigure_gui from dynamic_reconfigure:

Videos

Tracking a Pringles:

Tracking the juicebox: