Only released in EOL distros:

Package Summary

cob_people_perception

- Author: Maintained by Richard Bormann

- License: BSD,Fraunhofer IPA

- Source: git https://github.com/ipa320/cob_people_perception.git (branch: release_electric)

Package Summary

Contains several packages for detecting and identifying faces, and detecting and tracking humans with RGB-D sensors or laser scanners. Detects persons through head and face detection and identifies the detected faces. The results are tracked over time to increase confidence. The system is optimized to be used with a Microsoft Kinect or Asus Xtion Pro Live sensor but could also handle different sensors as long as depth and color images are provided.

- Maintainer: Richard Bormann <richard.bormann AT ipa.fraunhofer DOT de>

- Author: Richard Bormann <richard.bormann AT ipa.fraunhofer DOT de>, Thomas Zwölfer, Olha Meyer

- License: LGPL

- Source: git https://github.com/ipa-rmb/cob_people_perception.git (branch: hydro_dev)

Package Summary

Contains several packages for detecting and identifying faces, and detecting and tracking humans with RGB-D sensors or laser scanners. Detects persons through head and face detection and identifies the detected faces. The results are tracked over time to increase confidence. The system is optimized to be used with a Microsoft Kinect or Asus Xtion Pro Live sensor but could also handle different sensors as long as depth and color images are provided.

- Maintainer status: developed

- Maintainer: Richard Bormann <richard.bormann AT ipa.fraunhofer DOT de>

- Author: Richard Bormann <richard.bormann AT ipa.fraunhofer DOT de>, Thomas Zwölfer, Olha Meyer

- License: LGPL

- Source: git https://github.com/ipa-rmb/cob_people_perception.git (branch: indigo_dev)

Contents

Introduction

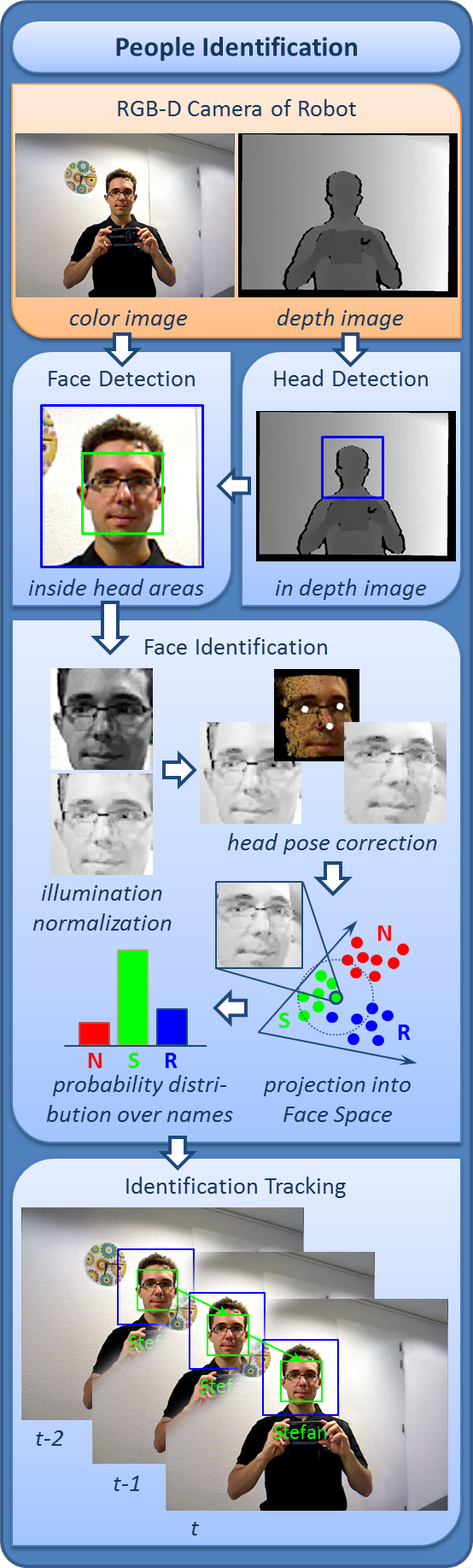

This package contains software for detecting heads and faces and recognizing people. Head and face detection utilize the Viola-Jones classifier on depth or color images, respectively. Face recognition of previously learned people is based on either Eigenfaces or Fisherfaces. The recognition method can be configured and trained with a simple interface as explained in the next section.

Quick Start

To use people detection and recognition clone the repository to your disc and build it. Remark: the current cob_perception_common release is not yet compatible with cob_people_detection. You might need to download and build it manually from https://github.com/ipa-rmb/cob_perception_common.git if you encounter build problems.

For beginners: go to your home folder, create a catkin workspace, download the code, install dependencies, build:

cd && mkdir -p catkin_ws/src && cd catkin_ws/src && catkin_init_workspace && cd .. catkin_make && cd src git clone https://github.com/ipa-rmb/cob_people_perception.git git clone https://github.com/ipa-rmb/cob_perception_common.git cd .. && source devel/setup.bash rosdep install --from-path src/ -y -i catkin_make -DCMAKE_BUILD_TYPE="Release"

Then install (http://wiki.ros.org/openni_launch) and start the Openni driver (old Kinect, Asus) with

roslaunch openni_launch openni.launch

or the Openni2 driver (new Asus, http://wiki.ros.org/openni2_launch) with

roslaunch openni2_launch openni2.launch

or any other driver according to your used sensor.

When using the openni or openni2 driver, please ensure that depth_registration is activated (e.g. by using rosrun rqt_reconfigure rqt_reconfigure). Also check that the camera_namespace argument and the camera topics colorimage_in_topic and pointcloud_rgb_in_topic, which are set in the ros/launch/people_detection.launch file, correspond to the topic names of your camera driver.

Then launch people detection with

roslaunch cob_people_detection people_detection.launch

or with

roslaunch cob_people_detection people_detection.launch using_nodelets:=true

The second version uses nodelets for the first stages of data processing which might yield a substantially better runtime if your processor is fast enough on single core usage.

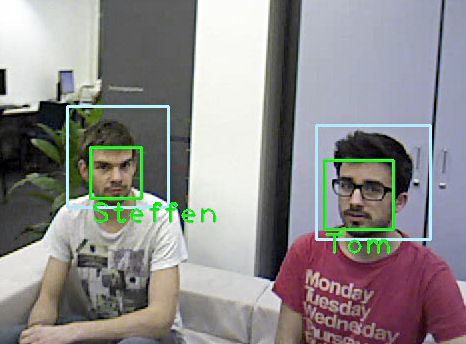

Now a window should pop up and present you with the current image of the camera. Heads will be framed with a light blue rectangle and detected faces are indicated in light green. For your convenience, the package contains a client for easy usage of people detection. Start the client with

rosrun cob_people_detection people_detection_client

No identification data will be available the first time you start the people detection node on your computer. To record some data adjust the frame rate of the camera, first, by choosing 5 - activate/deactivate sensor message gateway in the client and then enter 1 to activate the sensor message gateway. The frame rate should be chosen somewhere between 5 and 30 Hz depending on your computer's power.

Choose an option: 1 - capture face images 2 - update database labels 3 - delete database entries 4 - load recognition model (necessary if new images/persons were added to the database) >> 5 - activate/deactivate sensor message gateway << 6 - get detections q - Quit Type 1 to activate or 2 to deactivate the sensor message gateway: 1 At which target frame rate (Hz) shall the sensor message gateway operate: 20 Gateway successfully opened.

Now select 1 - capture face images from the menu of the client and enter the name of the first person to capture. Please do not use any whitespaces in the name. Following, you are asked to select how to record the data: manually by pressing a button or automatically. In the manual mode, you have to press c each time an image shall be captured and q to finish recording. Make sure that only one person is in the image during recording, otherwise no data will be accepted because the matching between face and label would be ambiguous.

Choose an option: >> 1 - capture face images << 2 - update database labels 3 - delete database entries 4 - load recognition model (necessary if new images/persons were added to the database) 5 - activate/deactivate sensor message gateway 6 - get detections q - Quit Input the label of the captured person: ChuckNorris Mode of data capture: 0=manual, 1=continuous: 0 Recording job was sent to the server ... Waiting for the capture service to become available ... [ INFO] [1345100028.962812337]: waitForService: Service [/cob_people_detection/face_capture/capture_image] has not been advertised, waiting... [ INFO] [1345100028.985320699]: waitForService: Service [/cob_people_detection/face_capture/capture_image] is now available. Hit 'q' key to quit or 'c' key to capture an image. Image capture initiated ... image 1 successfully captured. Image capture initiated ... image 2 successfully captured. Image capture initiated ... image 3 successfully captured. Image capture initiated ... image 4 successfully captured. Finishing recording ... Data recording finished successfully. Current State: SUCCEEDED Message: Manual capture finished successfully.

In the automatic recording mode you have to specify the number of images that shall be collected and the time between two recordings. We recommend to capture between 40 and 100 images per person. Make sure to take snapshots from different perspectives and under different illumination conditions (shadows on your face may look very different!).

Choose an option: >> 1 - capture face images << 2 - update database labels 3 - delete database entries 4 - load recognition model (necessary if new images/persons were added to the database) 5 - activate/deactivate sensor message gateway 6 - get detections q - Quit Input the label of the captured person: ChuckNorris Mode of data capture: 0=manual, 1=continuous: 1 How many images shall be captured automatically? 50 What is the desired delay time in seconds between two recordings? 0.8 Recording job was sent to the server ... Data recording finished successfully. Current State: SUCCEEDED Message: Continuous capture of 50 images finished successfully.

If you are using one of the more modern recognition methods (choices 2=LDA2D or 3=PCA2D, can be set in file cob_people_detection/ros/launch/face_recognizer_params.yaml as parameter recognition_method) then please be aware that they require at least two different people in the training data. The next step, building a recognition model, will not start with only one person available with these algorithms. If you quit the program before recording a second person, the program might not start anymore. Then please delete all data from ~/.ros/cob_people_detection/files/training_data, start the program and record two people.

After training, you need to build a recognition model with the new data. To do so, just select 4 - load recognition model from the client's menu. In the following prompt you can either list all persons that you captured and that shall be recognized by the system, e.g. by typing

Choose an option: 1 - capture face images 2 - update database labels 3 - delete database entries >> 4 - load recognition model (necessary if new images/persons were added to >> the database) << 5 - activate/deactivate sensor message gateway 6 - get detections q - Quit Enter the labels that should occur in the recognition model. By sending an empty list, all available data will be used. Enter label (finish by entering 'q'): ChuckNorris Enter label (finish by entering 'q'): OlliKahn Enter label (finish by entering 'q'): q Recognition model is loaded by the server ... A new recognition model is currently loaded or generated by the server. The following labels will be covered: - ChuckNorris - OlliKahn The new recognition model has been successfully loaded. Current State: SUCCEEDED Message: Model successfully loaded.

or you can directly enter q and all available persons will be trained.

After all steps succeeded, you can watch the recognition results in the display. Although you may access all training data functions (update, delete, etc.) from the client directly you may also access the files directly, which are located in your home folder at ~/.ros/cob_people_detection/files/training_data.

Detailed Documentation

Overview

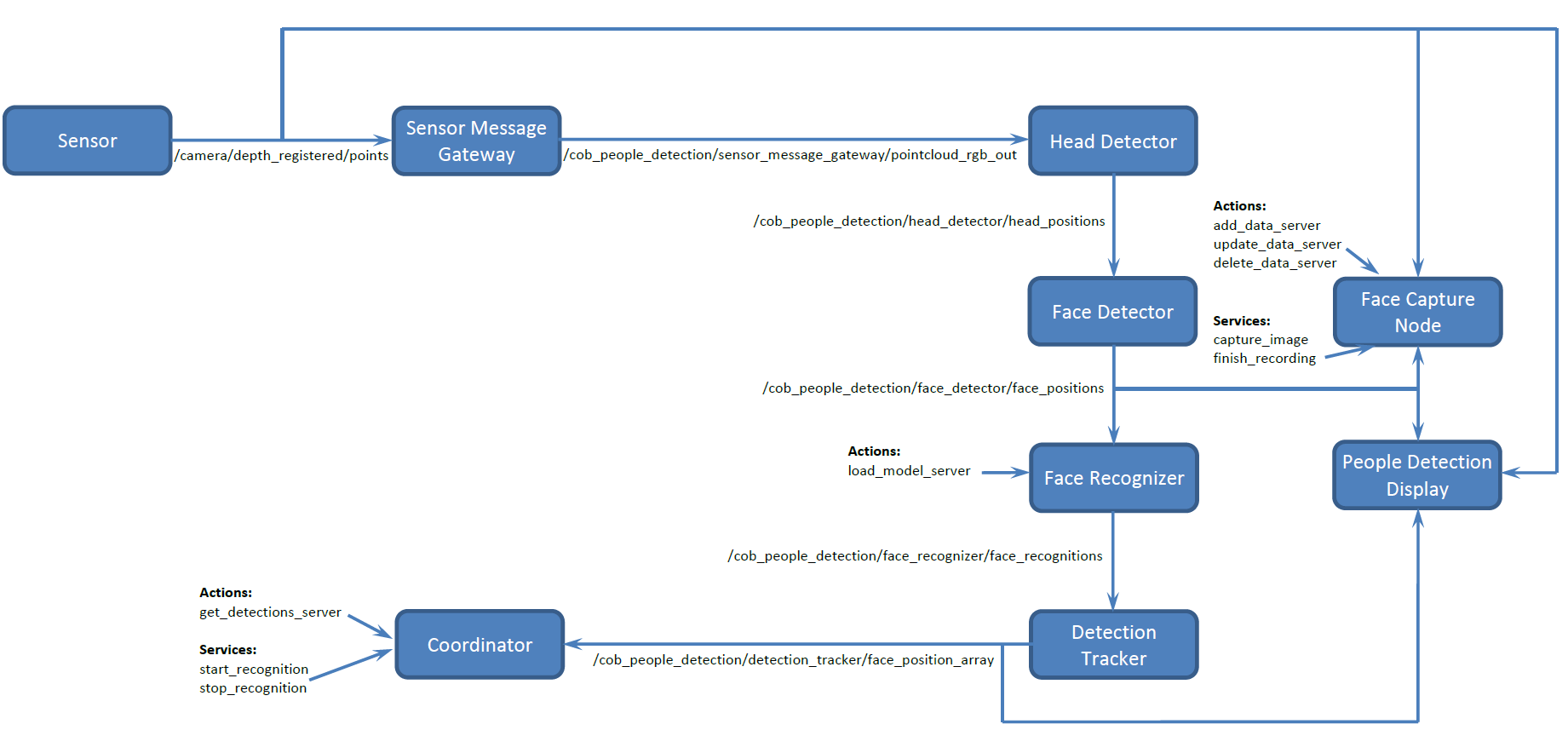

The cob_people_detection pipeline contains the steps head detection, face detection, face recognition and detection tracking. Around the pipeline there exist several nodes that provide the sensor data and control the behaviour. The Coordinator node is the interface between cob_people_detection and other programs. This node can open or close the data gateway to the recognition pipeline by adjusting the behaviour of the Sensor Message Gateway node. Furthermore, the Coordinator can handle requests for detections in form of an action. The purpose of the Sensor Message Gateway node is to pass sensory data at a defined rate, which might be zero, i.e. no messages are forwarded to the pipeline.

The first step of person recognition is the Head Detector node which detects heads in the depth image of RGB-D data. Following, the Face Detector determines the locations of faces within these head regions. Then the Face Recognizer identifies the name of each detected face. The recognition results are finally collected by the Detection Tracker and published as a topic /cob_people_detection/detection_tracker/face_position_array that can be subscribed to if continuous detection results are desired. If detection of faces is supposed to happen only on demand, use the get_detections_server action of the Coordinator.

The Face Capture node facilitates learning of new persons or the modification of training data. The action add_data_server realizes the addition of new training data. After the capture of new training data the recognition model has to be rebuilt to incorporate the new data. To do so, call the action load_model_server of the Face Recognizer after recording new images. The People Detection Display node can be used to display the results in a live image and to verify which data is recorded during training.

People Detection Client Interface

The people detection client is a convenient interface to all functions of the people detection software. Its source code is furthermore a good place to learn the C++ interface of people detection if it shall be called directly from your software.

The menu provides you with the following functions:

Capture face images records images for the face recognition component. Its modes of operation have already been discussed in the Quick Start section.

Update database labels updates the label of training data items. The entries that shall be updated can be selected either by index or by label.

Delete database entries deletes data from the training set. The data is selected either by index or by label.

Load recognition model This function loads or trains a recognition model for the desired compilation of people from the training set. See example in the Quick Start section. It is necessary to run this function whenever new training data has been recorded and shall be used.

Activate/deactivate sensor message gateway controls the gateway which passes sensory data at a given rate to the pipeline.

Get detections is an example for the action interface to the results. The action can also be called when the gateway is closed - then it will be automatically opened for a short time until the detections are received. The other way of accessing the detection results is to simply open the gateway and subscribe to the /cob_people_detection/detection_tracker/face_position_array topic.

ROS API

The parameters, topics, services and actions provided or needed by the single nodes of people detection are described here.Action Goal

Coordinator Node

- action that handles requests for people detections

Face Capture Node

- action server that handles requests for adding data to the data base

- action server that handles requests for updating labels of data base entries

- action server that handles requests for deleting data from the data base

Face Recognizer

- action server that handles load requests for a new recognition model

Subscribed Topics

Sensor Message Gateway

- colored point cloud from RGB-D sensor

Head Detector

- colored point cloud from sensor message gateway

Face Detector

- array of the detected heads, includes bounding box, color and depth image

Face Recognizer

- array of the detected heads and faces, includes bounding boxes of heads and faces, color and depth images

Detection Tracker

- array of the detected heads and faces, includes bounding boxes of heads and faces and 3D coordinates

- image of segmented persons

Coordinator Node

- array of the detected heads and faces after tracking, includes bounding boxes of heads and faces and 3D coordinates

Face Capture Node

- colored image from RGB-D sensor

- array of the detected heads and faces, includes bounding boxes of heads and faces, color and depth images

People Detection Display Node

- colored image from RGB-D sensor

- array of the detected heads and faces, includes bounding boxes of heads and faces, color and depth images

- array of the detected heads and faces after tracking, includes bounding boxes of heads and faces and 3D coordinates

Published Topics

Sensor Message Gateway

- colored point cloud from RGB-D sensor, publishing rate slowed down

Head Detector

- array of the detected heads, includes bounding box, color and depth image

Face Detector

- array of the detected heads and faces, includes bounding boxes of heads and faces, color and depth images

Face Recognizer

- array of the detected heads and faces, includes bounding boxes of heads and faces and 3D coordinates

Detection Tracker

- array of the detected heads and faces after tracking, includes bounding boxes of heads and faces and 3D coordinates

Services

Coordinator Node

- service that starts the recognition pipeline by opening the sensor message gateway

- service that stops the recognition pipeline by closing the sensor message gateway

Face Capture Node

- service server that triggers an image recording, only active during handling of add_data_server action when capturing in manual mode

- service server that finishes image recording, only active during handling of add_data_server action when capturing in manual mode

Parameters

Global parameters

Parameters, valid for whole package.- directory where all data is stored

Coordinator Node

Paramter file ros/launch/coordinator_params.yaml .- namespace of the pipeline's sensor message gateway

Sensor Message Gateway

Paramter file ros/launch/sensor_message_gateway_params.yaml .- rate at which the input messages are published (in Hz), also available with dynamic_reconfigure, which might overwrite the parameter from the launch file

Head Detector

Paramter file ros/launch/head_detector_params.yaml .- this string allows to change the path to the Haar classifier model

- if enabled, invalid pixels of the depth image are interpolated by region growing of their neighbors

- the factor by which the search window is scaled between the subsequent scans

- minimum number (minus 1) of neighbor rectangles that makes up an object.

- minimum search scale x

- minimum search scale y

Face Detector

Paramter file ros/launch/face_detector_params.yaml .- this string allows to change the path to the Haar classifier model

- the factor by which the search window is scaled between the subsequent scans

- minimum number (minus 1) of neighbor rectangles that makes up an object.

- minimum search scale x

- minimum search scale y

- when this flag is activated, the 3d size of a face is determined and only reasonable sizes may continue in the processing pipeline

- the maximum feasible face diameter [m] if reason_about_3dface_size is enabled

- the minimum feasible face diameter [m] if reason_about_3dface_size is enabled

- maximum distance [m] of detected faces to the sensor

- enables some debug outputs

Face Recognizer

Paramter file ros/launch/face_recognizer_params.yaml .- this flag enables or disables the face recognition step

- this list specifies the persons who shall be recognized by the face recognizer, comment out this line to avoid any specification at startup

- parameter specifies the size of the images after the normalization process

- if true illumination normalization is performed during the normalization process

- if this is true, automatic head pose aligment is performed during normalization process

- if this is true, a normalization algorithm specialized for extreme illumination conditions is used during normalization

- if this is true, the threshold which distinguishes known from unknown people is used during classification

- enables some debug outputs

- This parameter allows the user to select the algorithm used for face recognition. Available algorithms are: 0 = Fisherfaces, 1 = Eigenfaces, 2 = LDA2D, 3 = PCA2D. Attention: choices 0 and 1 work with a single person in the training data, options 2 and 3 will cause a crash of the program if you do not train at least 2 different people. This will also happen if you stop the program after recording only one person.

Detection Tracker

Paramter file ros/launch/detection_tracker_params.yaml .- enables the combination of face detections with the openni people segmentation, not recommended as the people segmentation is supposed to work with a fixed camera (not moving) and crashes regularly on mobile robots, if activated do not forget to start the segmentation node in the detection_tracker.launch file

- timespan during which a face is preserved in the list of tracked faces although it is currently not visible

- the minimum area inside the face rectangle found in the color image that has to be covered with positive people segmentation results (from openni_tracker), only effective if segmentation is used

- the minimum area inside the head rectangle found in the depth image that has to be covered with positive people segmentation results (from openni_tracker)

- maximum tracking distance for a face (in meters), i.e. a face can move this distance between two images and can still be tracked

- face identification score decay rate (0 < x < 1), i.e. the score for each label at a certain detection location is multiplied by this factor

- minimum face identification score to publish (0 <= x < max_score), i.e. this score must be exceeded by a label at a detection location before the person detection is published (higher values increase robustness against short false detections, but consider the maximum possible score max_score w.r.t. the face_identification_score_decay_rate: new_score = (old_score+1)*face_identification_score_decay_rate --> max_score = face_identification_score_decay_rate/(1-face_identification_score_decay_rate))

- if this is true, the unknown label will be assigned for the identification of a person if it has the highest score, otherwise, the last detection of a name will display as label even if there has been a detection of Unknown recently for that face (i.e. if true: face names will display only while they are certain, if false: face names will always display after they have been certain once)

- enables some debug outputs

- if this is true, rosbag mode is activates. Timestamps of input data is ignored as it is expected to stem from rosbag files. The setting of the parameter face_redetection_time is irrelevant if this is true.

Support

Please consult ros answers to see if your problem is already known.

Please use the mailing list for additional support or feature discussion.

References

If you are using this software in your work, please cite the Humanoids paper from 2013.

- Ninghang Hu, Richard Bormann, Thomas Zwölfer, and Ben Kröse, "Multi-User Identification and Efficient User Approaching by Fusing Robot and Ambient Sensors," in Proceedings of the IEEE Conference on Robotics and Automation (ICRA), 2014.

- Richard Bormann, Thomas Zwölfer, Jan Fischer, Joshua Hampp, and Martin Hägele, "Person Recognition for Service Robotics Applications," in Proceedings of the 13th International IEEE-RAS International Conference on Humanoid Robots, 2013.

- Jan Fischer, Daniel Seitz, and Alexander Verl, "Face Detection using 3-D Time-of-Flight and Colour Cameras," in: Neumann, Kristin (Ed.) u.a.; International Federation of Robotics u.a.: Joint International Conference of ISR/ROBOTIK2010: Munich, 7-9 June 2010. Berlin; Offenbach: VDE-Verlag, 2010, S. 112-116.