Note: This tutorial assumes that you have completed the previous tutorials: cob_tutorials/Tutorials/Startup simulation, cob_tutorials/Tutorials/Navigation (global).

Exploration of the environment while searching for objects

Description: This tutorial introduces a simple task coordination example for exploration. The robot's task is to move around the environment and search for objects.

Tutorial Level: INTERMEDIATE

Prerequisites

Startup simulation with robot.

Populate the environment with objects and start navigation and perception components

roslaunch cob_task_coordination_tutorials explore.launch robot:=cob4-2 robot_env:=ipa-apartment sim:=true

You should see the environment being populated with furniture and objects.

Start navigation

roslaunch cob_navigation_global 2dnav_ros_dwa.launch robot:=cob4-2 robot_env:=ipa-apartment

Run the exploration script

rosrun cob_task_coordination_tutorials explore.py

You should see the robot driving around the environment to randomly selected target poses. As soon as it arrives at a pose, it starts to search for objects. If it finds any objects, the robot announces their names and then drives to the next target pose.

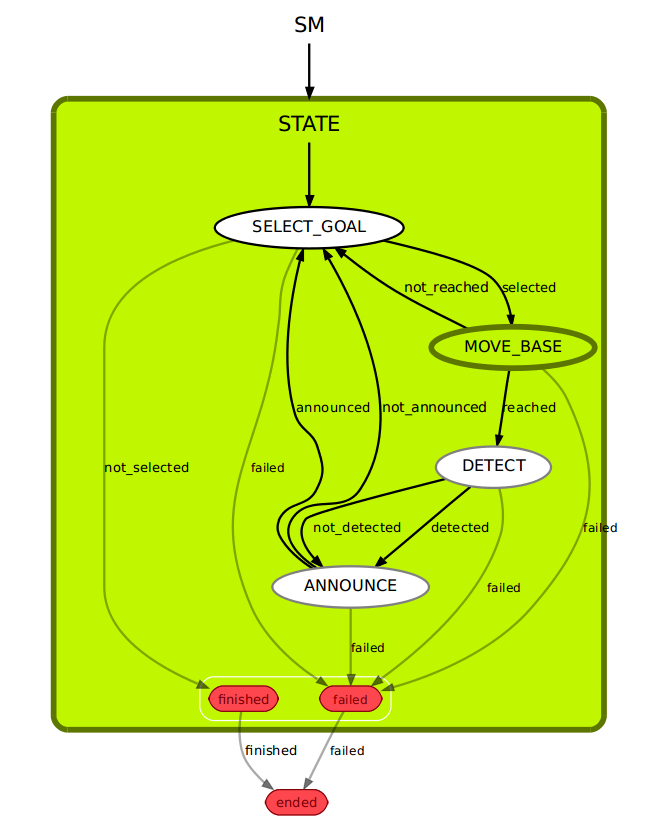

The image below shows the simple state machine which is active in this task.

If you want to visualise the current state you can use smach_viewer.

rosrun smach_viewer smach_viewer.py

You can use image_view to see what the robot camera is currently looking at.

rosrun image_view image_view image:=/cam3d/rgb/image_raw

Modify the task

Adjust the exploration behaviour

Your task is to adapt the exploration behaviour so that the robot no longer moves to randomly selected target poses but instead, for example:

- drives around the table in a circle, always facing the table.

- moves between target positions along the walls, always facing the walls.

To modify the behaviour you will need to adjust the SelectNavigationGoal state in the file cob_task_coordination_tutorials/scripts/explore.py.

```python
def execute(self, userdata):
    # defines
    x_min = 0
    x_max = 4.0
    x_increment = 2
    y_min = -4.0
    y_max = 0.0
    y_increment = 2
    th_min = -3.14
    th_max = 3.14
    th_increment = 2*3.1415926/4  # quarter turn (pi/2) between headings

    # generate new list of poses, if list is empty
    # this will just spawn a rectangular grid
    if len(self.goals) == 0:
        x = x_min
        y = y_min
        th = th_min
        while x <= x_max:
            while y <= y_max:
                while th <= th_max:
                    pose = []
                    pose.append(x) # x
                    pose.append(y) # y
                    pose.append(th) # th
                    self.goals.append(pose)
                    th += th_increment
                y += y_increment
                th = th_min
            x += x_increment
            y = y_min
            th = th_min

    # define here how to handle list of poses
    #userdata.base_pose = self.goals.pop() # takes last element out of list
    userdata.base_pose = self.goals.pop(random.randint(0,len(self.goals)-1)) # takes random element out of list

    return 'selected'
```
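As a starting point for the table variant, the rectangular grid could be replaced by poses on a circle. The following is only a minimal sketch: the helper name `circle_poses`, the table centre, the radius and the number of poses are all assumptions made for illustration and are not taken from explore.py. It produces poses in the same `[x, y, th]` format as the grid above, with each heading pointing at the circle centre.

```python
import math


def circle_poses(center_x, center_y, radius, num_poses):
    """Return [x, y, th] poses evenly spaced on a circle, each facing the centre."""
    poses = []
    for i in range(num_poses):
        angle = 2.0 * math.pi * i / num_poses  # angular position on the circle
        x = center_x + radius * math.cos(angle)
        y = center_y + radius * math.sin(angle)
        # heading from the robot position back towards the centre
        th = math.atan2(center_y - y, center_x - x)
        poses.append([x, y, th])
    return poses


# Hypothetical table position and clearance; adjust these to the actual map.
goals = circle_poses(center_x=2.0, center_y=-2.0, radius=1.5, num_poses=8)
```

Inside `execute`, such a list could be assigned to `self.goals` when it is empty. Taking elements with `self.goals.pop(0)` instead of a random index would then visit the poses in order around the circle.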

Change the announcement of objects

Instead of just using text-to-speech, you can move the arm and torso, for example:

- lift the arm if objects were found, using sss.move("arm","pregrasp") to lift the arm and sss.move("arm","folded") to bring it back to its folded position.

- shake the torso if no object was found, using sss.move("torso","shake").

To do this, place the sss.move() commands in the AnnounceFoundObjects state in the file cob_task_coordination_tutorials/scripts/explore.py.
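The gesture logic could look roughly like the sketch below. It is written so that it runs without a robot: `FakeScriptServer` is a hypothetical stand-in that only records calls, and `announce_with_gestures` is an illustrative helper, not a function from explore.py. The tutorial does not show how the detected objects reach the AnnounceFoundObjects state, so the sketch simply takes them as a list. Only the `sss.move()` calls and the outcome names `'announced'` and `'not_announced'` come from this tutorial.

```python
class FakeScriptServer:
    """Hypothetical stand-in for sss that only records the requested motions."""

    def __init__(self):
        self.calls = []

    def move(self, component, target):
        self.calls.append((component, target))


def announce_with_gestures(sss, found_objects):
    """Gesture depending on whether anything was detected; return a state outcome."""
    if found_objects:
        sss.move("arm", "pregrasp")  # lift the arm
        sss.move("arm", "folded")    # bring the arm back to its folded position
        return 'announced'
    sss.move("torso", "shake")       # nothing found: shake the torso
    return 'not_announced'
```

In the real state, the body of `announce_with_gestures` would go into the `execute` method of AnnounceFoundObjects and use the script server instance that explore.py already provides.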

Change state transitions

You can also change the transitions between the states. Each transition maps a named outcome of one state to the state that runs next, or to an outcome of the state machine itself. For example, you can

- let the robot only announce something in case it could detect something and

- let the task stop as soon as the robot detected something

To do so, edit the Explore class in the file cob_task_coordination_tutorials/scripts/explore.py.

```python
smach.StateMachine.add('SELECT_GOAL',SelectNavigationGoal(),
    transitions={'selected':'MOVE_BASE',
                 'not_selected':'finished',
                 'failed':'failed'})

smach.StateMachine.add('MOVE_BASE',ApproachPose(),
    transitions={'reached':'DETECT',
                 'not_reached':'SELECT_GOAL',
                 'failed':'failed'})

smach.StateMachine.add('DETECT',DetectObjectsFrontside(['milk1','milk2','milk3','milk4','milk5','milk6','salt1','salt2','salt3','salt4'],mode="one"),
    transitions={'detected':'ANNOUNCE',
                 'not_detected':'SELECT_GOAL',
                 'failed':'failed'})

smach.StateMachine.add('ANNOUNCE',AnnounceFoundObjects(),
    transitions={'announced':'finished',
                 'not_announced':'SELECT_GOAL',
                 'failed':'failed'})
```

Have a look at the visualisation of the state machine using the smach_viewer to see the changes.
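To reason about a changed transition table before running it on the robot, it can help to trace it by hand. The sketch below is plain Python and does not use the smach API: the `TRANSITIONS` dictionary copies the state and outcome names from the listing above, while the `run` helper and the scripted outcome sequence are assumptions made for illustration.

```python
# state -> {outcome: next state, or final outcome of the state machine}
TRANSITIONS = {
    'SELECT_GOAL': {'selected': 'MOVE_BASE', 'not_selected': 'finished', 'failed': 'failed'},
    'MOVE_BASE': {'reached': 'DETECT', 'not_reached': 'SELECT_GOAL', 'failed': 'failed'},
    'DETECT': {'detected': 'ANNOUNCE', 'not_detected': 'SELECT_GOAL', 'failed': 'failed'},
    'ANNOUNCE': {'announced': 'finished', 'not_announced': 'SELECT_GOAL', 'failed': 'failed'},
}


def run(outcomes, start='SELECT_GOAL'):
    """Follow a scripted sequence of state outcomes and return the visited labels."""
    visited = [start]
    current = start
    for outcome in outcomes:
        current = TRANSITIONS[current][outcome]
        visited.append(current)
        if current not in TRANSITIONS:  # reached a final outcome, stop here
            break
    return visited


# The first pose yields no detection; the second one does, which ends the task.
path = run(['selected', 'reached', 'not_detected',
            'selected', 'reached', 'detected', 'announced'])
```

With this table, the trace only passes through ANNOUNCE after a detection and ends in `'finished'` right after the announcement, which matches the two example changes listed earlier.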