ROS for Human-Robot Interaction

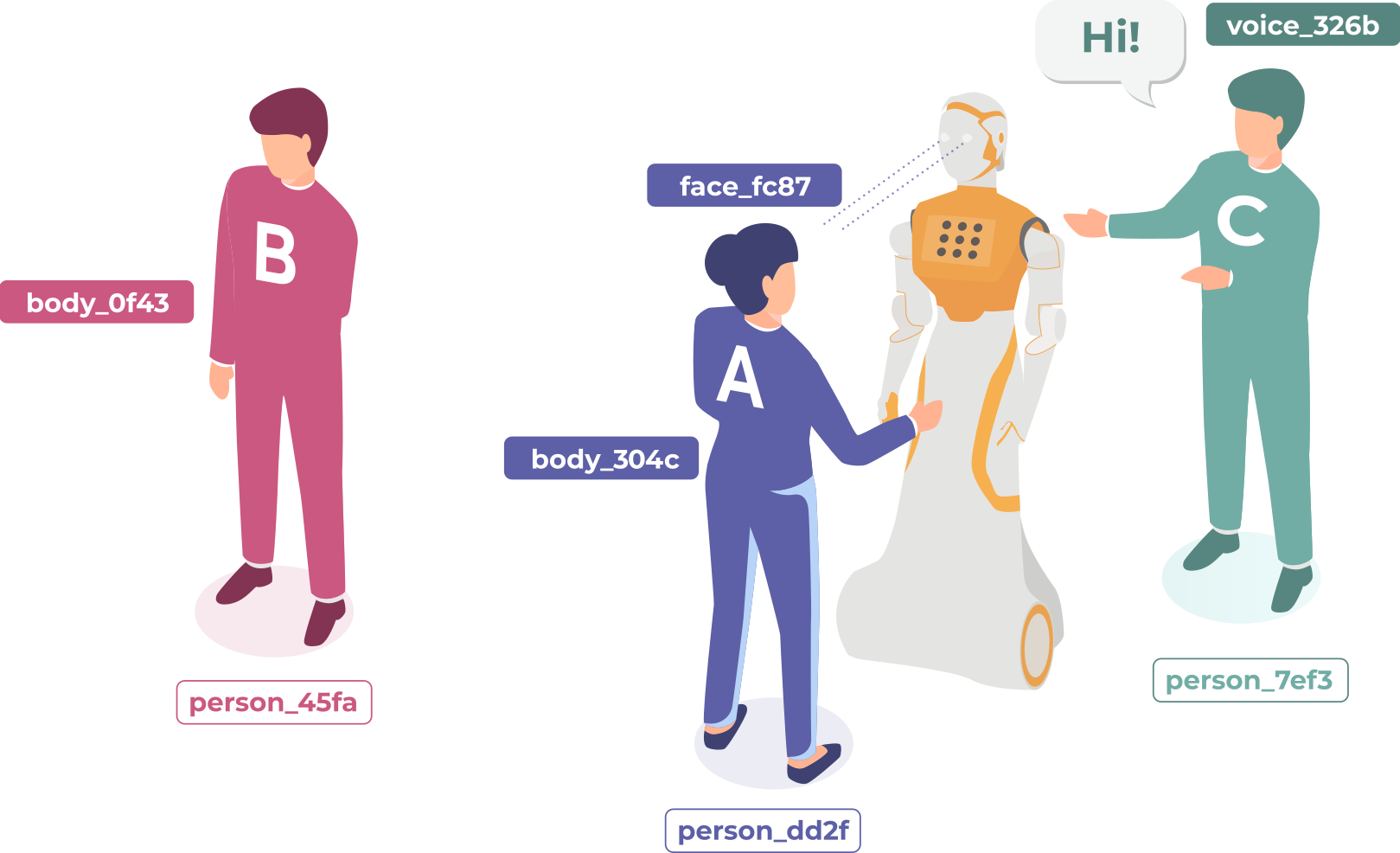

ROS for Human-Robot Interaction (or ROS4HRI) is an umbrella project for all the ROS packages, conventions, and tools that help with developing interactive robots in ROS.

The ROS4HRI documentation (concepts, API, tutorials, etc.) is maintained on a dedicated website: https://ros4hri.github.io